Google Releases Its Syntactic Parser as Open Source

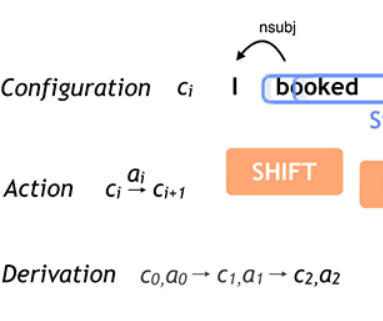

Google researchers spend a lot of time thinking about how computer systems can read and understand human language in order to process it intelligently. On May 12, 2016, Slav Petrov, who is based in New York and leads the machine learning for natural language group, announced the release of SyntaxNet, an open-source neural network framework implemented in TensorFlow that provides a new foundation for Natural Language Understanding (NLU). The release includes all the code needed to train new SyntaxNet models on your own data, as well as Parsey McParseface, an English parser that the Googlers have trained and that can be used to analyze English text. Parsey McParseface is built on powerful machine learning algorithms that learn to analyze the linguistic structure of language and can explain the functional role of each word in a given sentence.
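To make "the functional role of each word" more concrete, here is a minimal Python sketch of how a dependency parse can be represented. It is a hand-annotated illustration, not output from SyntaxNet or Parsey McParseface. The `Token` class, the `describe` helper and the example sentence are assumptions introduced here, and the relation labels (`nsubj`, `dobj`) follow common dependency-grammar conventions.

```python
from dataclasses import dataclass


@dataclass
class Token:
    index: int     # 1-based position in the sentence
    word: str
    head: int      # index of the governing word; 0 marks the root
    relation: str  # functional role relative to the head


# Hand-annotated parse of "Alice saw Bob" (illustrative, not parser output).
SENTENCE = [
    Token(1, "Alice", 2, "nsubj"),  # subject of "saw"
    Token(2, "saw", 0, "root"),     # main verb, root of the tree
    Token(3, "Bob", 2, "dobj"),     # direct object of "saw"
]


def describe(tokens):
    """Return one human-readable line per token stating its role."""
    by_index = {t.index: t for t in tokens}
    lines = []
    for t in tokens:
        if t.head == 0:
            lines.append(f"{t.word}: root of the sentence")
        else:
            lines.append(f"{t.word}: {t.relation} of {by_index[t.head].word}")
    return lines


if __name__ == "__main__":
    for line in describe(SENTENCE):
        print(line)
```

Each word points to exactly one head, so the whole sentence forms a tree. A parser such as Parsey McParseface aims to predict these head and relation assignments automatically.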

Read more:

https://googleresearch.blogspot.co.at/2016/05/announcing-syntaxnet-worlds-most.html

Literature:

Andor, D., Alberti, C., Weiss, D., Severyn, A., Presta, A., Ganchev, K., Petrov, S. & Collins, M. 2016. Globally normalized transition-based neural networks. arXiv preprint arXiv:1603.06042.

Petrov, S., McDonald, R. & Hall, K. 2016. Multi-source transfer of delexicalized dependency parsers. US Patent 9,305,544.

Weiss, D., Alberti, C., Collins, M. & Petrov, S. 2015. Structured training for neural network transition-based parsing. arXiv preprint arXiv:1506.06158.

Vinyals, O., Kaiser, Ł., Koo, T., Petrov, S., Sutskever, I. & Hinton, G. 2015. Grammar as a foreign language. Advances in Neural Information Processing Systems, 2755-2763.