“Mathematicians/Statisticians would like to turn humans into computers –

AI/Machine Learners would like to turn computers into humans.

Our HCAI mission is to combine both humans and computers”

Our Mission:

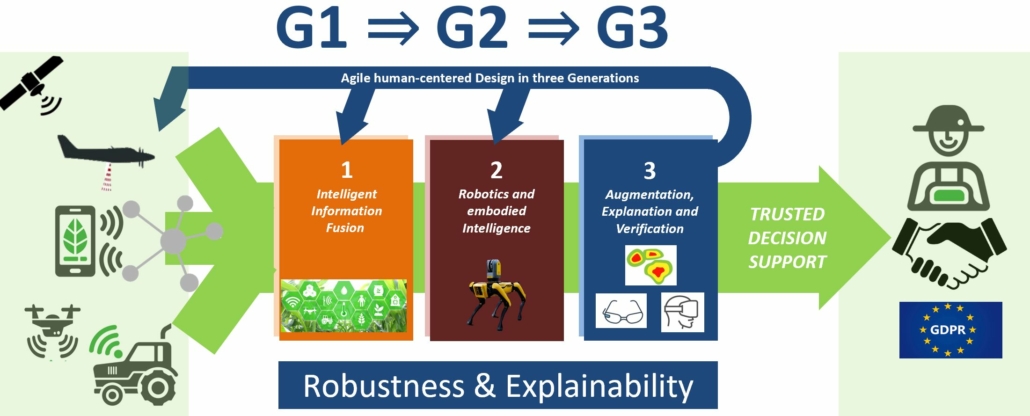

Our central theme is Robustness & Explainability. In research area (1) we fuse data from various sensors for multimodal learning from graphs. In research area (2) we bring “intelligence” into cyber-physical systems. In research area (3) we provide augmentation, explanation and verification to make results traceable and interpretable, enabling trustworthy AI and decision support aligned with social, ethical and legal requirements. In Generation G1 we work with existing technology to deliver rapid solutions to pressing problems (low-hanging fruit). In Generation G2 we work on modifications of G1. In Generation G3 we work on cutting-edge technologies beyond the state of the art to contribute to the international research community.

Please watch the inaugural lecture: https://human-centered.ai/antrittsvorlesung-andreas-holzinger

Our Background:

Artificial Intelligence (AI) is now on everybody's lips due to the great success of machine learning (e.g. ChatGPT). The use of AI in domains that impact human life (agriculture, climate, forestry, One Health, …) has led to an increased demand for trust in AI. The Human-Centered AI Lab (HCAI) works on generic methods that promote robustness and explainability to foster trustworthy AI solutions. It advocates a synergistic approach that gives humans control over AI and aligns AI with human values, ethical principles and legal requirements to ensure safe and fair human-machine interaction.

Dramatic newspaper and television headlines about the growing danger of forest fires and the threat to our forests from pests (e.g. the bark beetle) and climate change, together with the importance of wood as a renewable resource, underline the urgency of these issues and the need for help from AI.

The HCAI lab is currently focusing on Multi-Objective Counterfactual Explanations with a human-in-the-loop to help achieve Forestry 5.0. As a first step, the lab is concentrating on concrete applications from forestry, in particular forest roads (dynamic prediction of trafficability, optimization of planning), cable yarding in steep terrain (autonomous systems, optimization of route planning, reduction of fuel consumption, simulators for training), and energy autonomy of cyber-physical systems.

We always address these three sustainability goals:

1) Occupational safety = SDG 3 Good Health and Well-being = Ensure healthy lives and promote well-being for all at all ages

2) Economy = SDG 12 Responsible Consumption and Production = Ensure sustainable consumption and production patterns

3) Ecology = SDG 15 Life on Land = Protect, restore and promote sustainable use of terrestrial ecosystems, sustainably manage forests, combat desertification, and halt and reverse land degradation and halt biodiversity loss

Technically, we concentrate on these three main topics:

1) Robotics > embodied intelligence, human-in-the-loop > Explainability

2) 3D Point Cloud Data > Graph Neural Networks > Explainability/Counterfactuals

3) Transformers (e.g. TabPFN) > Causal inference/learning > Explainability/Counterfactuals

From Machine Learning to Human-Centered AI

- We work on data-driven artificial intelligence (AI) and machine learning (ML), promoting a synergistic approach of Human-Centered AI (HCAI) that augments human intelligence with machine intelligence.

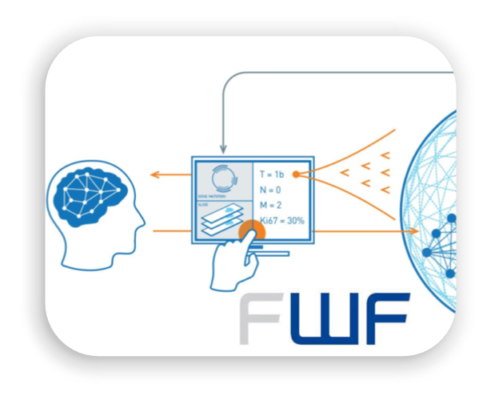

- One focus is on explainable AI (TEDx) and interpretable ML, where our pioneering work on interactive ML (iML, video, paper) with a human-in-the-loop and our experience in the health domain are very beneficial.

- Our goal is to enable human experts to understand the underlying explanatory factors, i.e. the causal reasons why an AI decision has been made, paving the way towards verifiable machine learning and ethically responsible AI.

We speak Python!

We welcome new lab members at all levels, see open jobs: https://human-centered.ai/open-positions

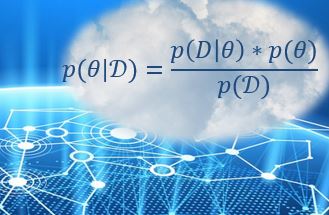

- To reach a level of usable intelligence, we need to learn from prior data, extract knowledge, generalize, fight the curse of dimensionality, and disentangle the underlying explanatory factors of data, i.e. understand the data in the context of an application domain (see > Research statement).

- This requires a concerted international effort (see > Expert network) and the education of a new kind of graduate (see > Teaching statement). Cross-domain approaches foster serendipity, cross-fertilize methodologies and insights, and ultimately transfer ideas into business for the benefit of humans (see > Conference CD-MAKE).

Contact

Prof. Dr. Andreas HOLZINGER

E-Mail: andreas.holzinger AT human-centered.ai

LinkedIn: https://www.linkedin.com/in/andreas-holzinger

Twitter: https://twitter.com/aholzin

Personal Web: https://www.aholzinger.at

YouTube Channel: https://goo.gl/XGwOmt

Scholar: https://scholar.google.com