Apr 14, 2015 Seminar Talks Deep Learning

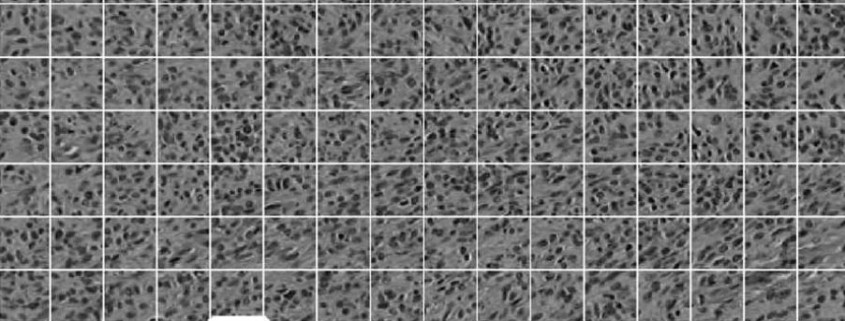

Title: Using Deep Learning for Discovering Knowledge from Images: Pitfalls and Best Practices

Lecturer: Marcus BLOICE

Abstract: Neural networks, and deep layered neural networks in particular, have been shown to be adept at image analysis and image classification. However, deep learning requires two things in order to work well: large amounts of data and lots of processing power. This talk covers both aspects, allowing you to maximise the potential of deep learning. Firstly, we will learn how the computational power of GPUs can be used to speed up learning by orders of magnitude, making it possible to learn from very large datasets on commodity hardware. Thanks to software such as Theano, Caffe, and Pylearn2, the GPU can be leveraged without needing to be an expert in parallel programming. This talk will discuss how. Secondly, the talk covers data preprocessing, data augmentation, and artificial data generation. These methods help you make the most of the data you possess, by expanding your dataset and preparing your data properly before analysis. This includes best practices in data preparation, using methods such as histogram equalisation, contrast stretching, and normalisation, as well as a detailed look at artificial data generation. The tools required to do so are described, all of them freely available, multi-platform software. Finally, the talk will touch on hyper-parameters, and on the best practices and pitfalls of hyper-parameter choice when training deep neural networks.
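To give a flavour of the preprocessing and augmentation methods named in the abstract, here is a minimal NumPy sketch. It is an illustration only, not material from the talk: the function names, the percentile defaults, and the choice of flips as the augmentation are all assumptions, and images are assumed to be 2D greyscale arrays (`uint8` for the histogram equalisation).

```python
import numpy as np


def contrast_stretch(img, low_pct=2, high_pct=98):
    """Linearly rescale intensities between two percentiles to [0, 1]."""
    lo, hi = np.percentile(img, [low_pct, high_pct])
    if hi <= lo:  # flat image: nothing to stretch
        return np.zeros(img.shape, dtype=np.float64)
    out = (img.astype(np.float64) - lo) / (hi - lo)
    return np.clip(out, 0.0, 1.0)


def equalise_histogram(img):
    """Histogram equalisation for a 2D uint8 image via its cumulative histogram."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]  # count at the lowest intensity present
    total = cdf[-1]
    if total == cdf_min:  # only one intensity present
        return img.copy()
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]  # apply the lookup table pixel by pixel


def normalise(img, eps=1e-8):
    """Shift to zero mean and scale to unit variance."""
    img = img.astype(np.float64)
    return (img - img.mean()) / (img.std() + eps)


def augment_flips(img):
    """Tiny augmentation example: the original plus two mirrored copies."""
    return [img, np.fliplr(img), np.flipud(img)]
```

Whether flips are a valid augmentation depends on the data: mirroring is harmless for many natural images but changes the meaning of, say, handwritten digits or text.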

Title: Pitfalls for applying Machine Learning in HCI-KDD: Things to be aware of and how to avoid them

Lecturer: Christof STOCKER

Abstract: When dealing with big and unstructured data sets, we often try to be creative and experiment with a number of different approaches for the purpose of knowledge discovery. This can lead to new insights and even spark novel ideas. However, applying algorithms to unknown data without understanding them is dangerous and can lead to false conclusions – with high statistical significance. In finite data sets, structure can emerge from sheer randomness. Furthermore, hidden variables can produce significant correlations that in turn might result in wrong conclusions. Beyond this, data science as a discipline has developed into a complex area in which mistakes occur easily and can lead even experienced scientists astray. In this talk we will investigate these pitfalls together using simple examples and discuss how we can address these concerns with manageable effort.
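The remark that structure can emerge from sheer randomness can be illustrated with a small simulation. The sketch below is an assumption-laden illustration, not an example from the talk: the function name, sample sizes, and seed are hypothetical. It draws purely random features and a purely random target, then reports the strongest Pearson correlation found among them.

```python
import numpy as np


def max_spurious_correlation(n_samples=20, n_features=1000, seed=0):
    """Largest absolute Pearson correlation between a random target
    and any of n_features independent random features."""
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n_samples, n_features))
    y = rng.standard_normal(n_samples)

    # Centre the data, then compute all correlations in one matrix product.
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    r = (Xc.T @ yc) / (np.linalg.norm(Xc, axis=0) * np.linalg.norm(yc))
    return float(np.max(np.abs(r)))


if __name__ == "__main__":
    # Few samples, many features: some feature looks strongly "related".
    print(max_spurious_correlation(n_samples=20, n_features=1000))
    # Many samples: the best spurious correlation shrinks considerably.
    print(max_spurious_correlation(n_samples=2000, n_features=1000))
```

With only 20 samples and 1000 candidate features, at least one feature will typically correlate strongly with the target even though no relationship exists. Screening many variables and reporting only the best one is a common route to such false findings.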