Our paper on “measuring the quality of explanations” just exceeded 5,000 downloads on the Springer site:

https://link.springer.com/article/10.1007/s13218-020-00636-z

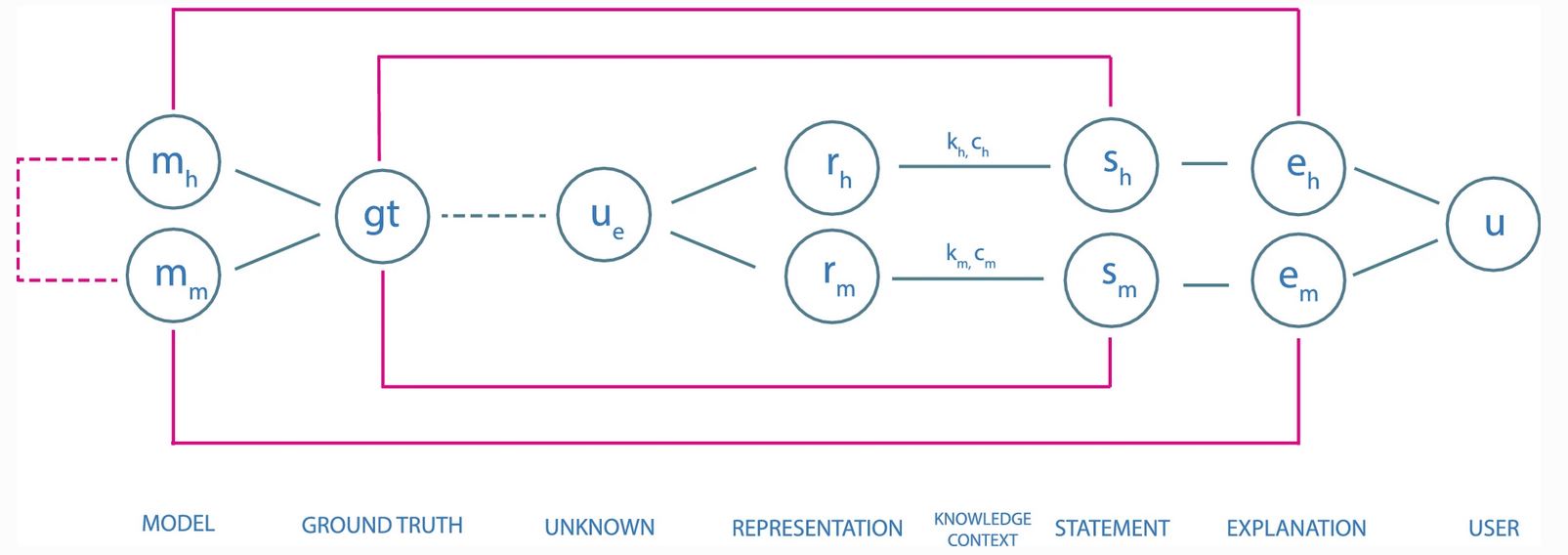

To build effective and efficient interactive human–AI interfaces, we have to address the question of how to evaluate the quality of explanations given by an explainable AI system. In this paper we introduce our System Causability Scale (SCS) to measure the quality of explanations. It is based on our notion of Causability (Holzinger et al. in Wiley Interdiscip Rev Data Min Knowl Discov 9(4), 2019) combined with concepts adapted from a widely accepted usability scale, the System Usability Scale (SUS).

Andreas Holzinger, Andre Carrington & Heimo Müller (2020). Measuring the Quality of Explanations: The System Causability Scale (SCS). Comparing Human and Machine Explanations. KI – Künstliche Intelligenz (German Journal of Artificial Intelligence), Special Issue on Interactive Machine Learning, edited by Kristian Kersting, TU Darmstadt, 34(2), 193-198, doi:10.1007/s13218-020-00636-z.