Explainable Artificial Intelligence (XAI) Methods – A Brief Overview

by

Andreas Holzinger, Anna Saranti, Christoph Molnar, Przemyslaw Biecek & Wojciech Samek

FULL OPEN ACCESS – download the paper for free directly from Springer:

https://rd.springer.com/book/10.1007/978-3-031-04083-2

Explainable Artificial Intelligence (explainable AI, xAI) has by now become an established field. Its vibrant community has developed a variety of very successful approaches whose goal is to explain and interpret the predictions of complex machine learning models such as deep neural networks.

The motivation for explainable AI lies in the great advances in statistical machine learning methods, particularly deep learning. Deep learning has re-popularised Artificial Intelligence and can outperform humans in many domains. However, the full potential of these methods is limited, because it is difficult to generate underlying explanatory structures from them and they lack an explicit declarative knowledge representation.

Explainable AI is helpful and desirable in many domains, and it is imperative in applications that affect human life. Increasing legal and privacy concerns [1] are the main motivators for understanding and tracing machine decision-making processes. Transparent algorithms could strengthen the trustworthiness of AI [2] and thus increase the acceptance of AI solutions in general [3].

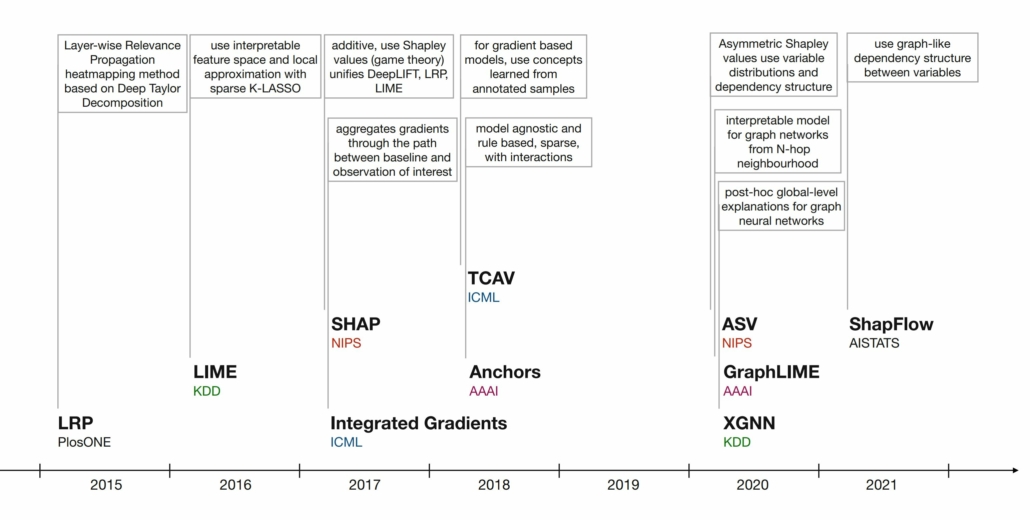

A chronology of the evolution of the XAI methods described in our paper. Interestingly, in the early days of this young discipline, methods analysed either the model itself or sample data. Later methods increasingly exploited information about the structure of, and the relationships between, the analysed variables. In the future, much more emphasis will be placed on causal relationships and on measuring how well humans understand an explanation, in order to foster trust [4]. A key challenge here is how a human can interact with these AI algorithms, that is, how artificial intelligence can be combined with natural intelligence [5].

In this new chapter, we briefly introduce a selection of methods and discuss each of them clearly and concisely. The goal is to give beginners, especially application engineers and data scientists, a quick overview of the current state of the art in this topic. The following 17 methods are covered:

LIME,

Anchors,

GraphLIME,

LRP,

DTD,

PDA,

TCAV,

XGNN,

SHAP,

ASV,

Break-Down,

Shapley Flow,

Textual Explanations of Visual Models,

Integrated Gradients,

Causal Models,

Meaningful Perturbations, and

X-NeSyL.

This chapter is part of our new volume xxAI – Beyond Explainable AI:

https://human-centered.ai/springer-lnai-xxai

[1] see e.g. https://doi.org/10.1145/3458652

[2] see e.g. https://doi.org/10.1016/j.inffus.2021.10.007

[3] see e.g. https://doi.org/10.1007/978-3-031-04083-2_18

[4] see e.g. https://rdcu.be/cN2sd

[5] see e.g. https://doi.org/10.1109/MC.2021.3092610