ABSTRACT

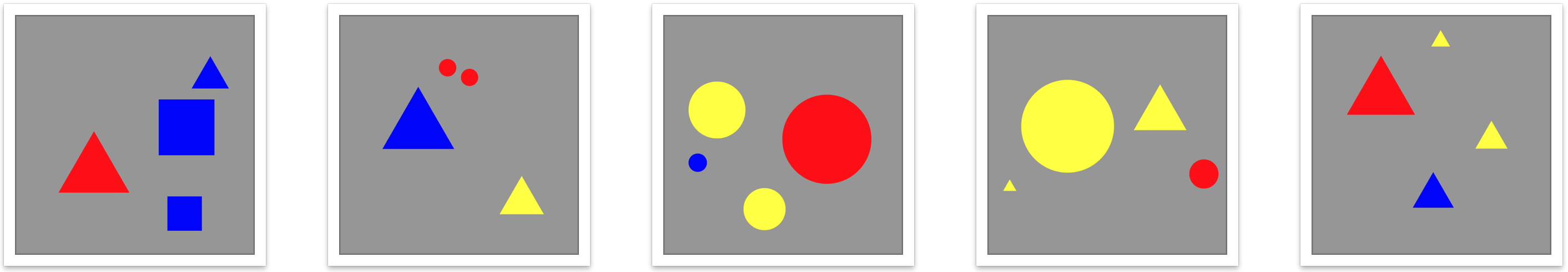

KANDINSKY Patterns (yes, named after the famous Russian artist Wassily Kandinsky) are mathematically describable, simple, self-contained, and hence controllable test data sets for the development, validation and training of explainability in artificial intelligence (AI) and machine learning (ML). Whilst our KANDINSKY Patterns have these computationally manageable properties, they are at the same time easily distinguishable by human observers. Consequently, controlled patterns can be described by both humans and algorithms.

We define a KANDINSKY Pattern as a set of KANDINSKY Figures, where for each figure an “infallible authority” (ground truth) defines that this figure belongs to the KANDINSKY Pattern. With this simple principle we build training and validation data sets for automatic interpretability and context learning.
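The definition above can be illustrated with a minimal sketch. It is not the implementation from the repository: the class and function names are hypothetical, and the object attributes (shape, color, size, position) as well as the example rule are assumptions made only for illustration. The ground truth is modelled as a plain predicate over figures, and a pattern is the set of figures that the predicate accepts.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Iterable, List, Tuple


@dataclass(frozen=True)
class KandinskyObject:
    """One geometric object in a figure (attribute set is an assumption)."""
    shape: str
    color: str
    size: float
    x: float
    y: float


# A figure is modelled here as an immutable set of objects.
KandinskyFigure = FrozenSet[KandinskyObject]

# The "infallible authority": a predicate that decides membership.
GroundTruth = Callable[[KandinskyFigure], bool]


def same_number_of_red_and_blue(figure: KandinskyFigure) -> bool:
    """Hypothetical ground truth: as many red objects as blue objects."""
    reds = sum(1 for obj in figure if obj.color == "red")
    blues = sum(1 for obj in figure if obj.color == "blue")
    return reds == blues


def split_by_ground_truth(
    figures: Iterable[KandinskyFigure], ground_truth: GroundTruth
) -> Tuple[List[KandinskyFigure], List[KandinskyFigure]]:
    """Split figures into pattern members and counterexamples."""
    members: List[KandinskyFigure] = []
    counterexamples: List[KandinskyFigure] = []
    for figure in figures:
        if ground_truth(figure):
            members.append(figure)
        else:
            counterexamples.append(figure)
    return members, counterexamples


# Two toy figures: the first satisfies the rule, the second does not.
fig_a: KandinskyFigure = frozenset({
    KandinskyObject("circle", "red", 0.2, 0.3, 0.3),
    KandinskyObject("square", "blue", 0.2, 0.7, 0.7),
})
fig_b: KandinskyFigure = frozenset({
    KandinskyObject("triangle", "red", 0.2, 0.5, 0.5),
})

members, counterexamples = split_by_ground_truth(
    [fig_a, fig_b], same_number_of_red_and_blue
)
```

Keeping the ground truth as an exchangeable function means the same set of figures can be relabelled for a different pattern simply by passing a different predicate.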

In the first paper [1], we describe the underlying idea of KANDINSKY Patterns and provide a GitHub repository. We invite the international machine learning research community to a challenge: to experiment with and extend our KANDINSKY Patterns, and thus to make progress in the fields of explainable AI and interpretable ML and, ultimately, to contribute to the upcoming field of causability.

In the second paper [2], we propose to use our KANDINSKY Patterns as an intelligence (IQ) test for machines, analogous to the tests used to assess human intelligence. Originally, intelligence tests are tools for cognitive performance diagnostics that provide a quantitative measure of the “intelligence” of a person, which is called the intelligence quotient (IQ). Intelligence tests are therefore colloquially called IQ-tests.

In the third paper [3], we give an overview of the current state of the art and discuss existing diagnostic tests and test data sets such as CLEVR, CLEVRER, CLOSURE, CURI, Bongard-LOGO and V-PROM.

Very important related concept learning benchmark datasets include:

Parts of this work have received funding from the Austrian Research Promotion Agency (FFG) under grant agreement No. 879881 (EMPAIA) and from the Austrian Science Fund (FWF), Project P-32554, explainable Artificial Intelligence.