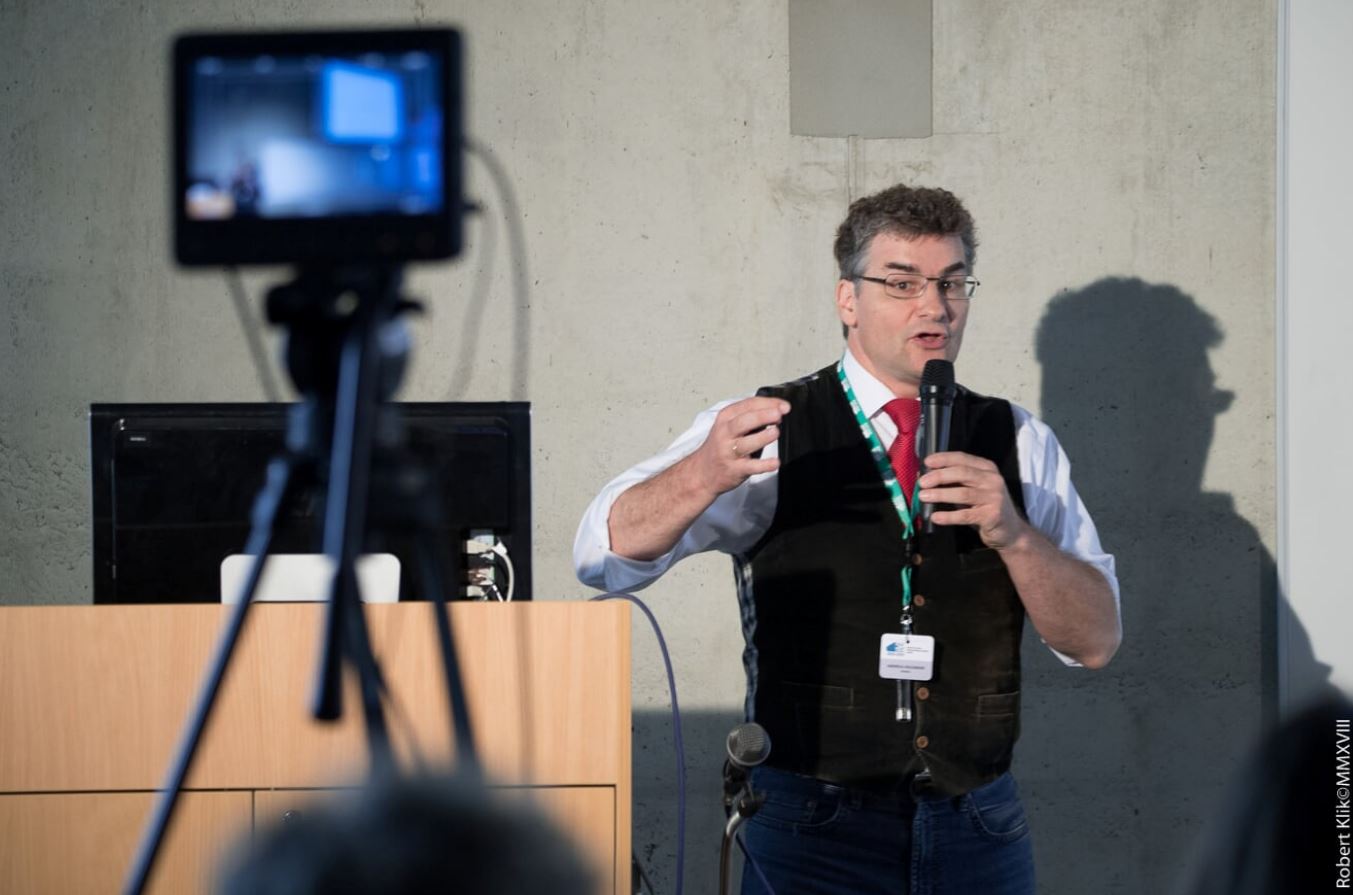

IEEE DISA 2018 in Kosice

The IEEE DISA 2018 World Symposium on Digital Intelligence for Systems and Machines was organized by the TU Kosice:

Here you can download my keynote presentation (see title and abstract below)

a) 4 Slides per page (pdf, 5,280 kB):

HOLZINGER-Kosice-ex-AI-DISA-2018-30Minutes-4×4

b) 1 slide per page (pdf, 8,198 kB):

HOLZINGER-Kosice-ex-AI-DISA-2018-30Minutes

c) and here the link to the paper (IEEE Xplore)

From Machine Learning to Explainable AI

d) and here the link to the video recording

https://archive.tp.cvtisr.sk/archive.php?tag=disa2018##videoplayer

Title: Explainable AI: Augmenting Human Intelligence with Artificial Intelligence and v.v

Abstract: Explainable AI is not a new field. Rather, the problem of explainability is as old as AI itself. While rule‐based approaches of early AI are comprehensible “glass‐box” approaches at least in narrow domains, their weakness was in dealing with uncertainties of the real world. The introduction of probabilistic learning methods has made AI increasingly successful. Meanwhile deep learning approaches even exceed human performance in particular tasks. However, such approaches are becoming increasingly opaque, and even if we understand the underlying mathematical principles of such models they lack still explicit declarative knowledge. For example, words are mapped to high‐dimensional vectors, making them unintelligible to humans. What we need in the future are context‐adaptive procedures, i.e. systems that construct contextual explanatory models for classes of real‐world phenomena.

Maybe one step is in linking probabilistic learning methods with large knowledge representations (ontologies), thus allowing to understand how a machine decision has been reached, making results re‐traceable, explainable and comprehensible on demand ‐ the goal of explainable AI.