We just had our keynote by Randy GOEBEL from the Alberta Machine Intelligence Institute (Amii), which works on enhancing understanding and innovation in artificial intelligence:

https://cd-make.net/keynote-speaker-randy-goebel

With Randy's kind permission, you can view his slides here (pdf, 2,680 kB):

https://human-centered.ai/wordpress/wp-content/uploads/2018/08/Goebel.XAI_.CD-MAKE.Aug30.2018.pdf

Here you can read a preprint of the joint paper from our explainable AI session (pdf, 835 kB):

GOEBEL et al. (2018) Explainable AI: the new 42?

Randy Goebel, Ajay Chander, Katharina Holzinger, Freddy Lecue, Zeynep Akata, Simone Stumpf, Peter Kieseberg & Andreas Holzinger (2018). Explainable AI: the new 42? In: Springer Lecture Notes in Computer Science LNCS 11015. Cham: Springer, pp. 295-303, doi:10.1007/978-3-319-99740-7_21.

Here is the link to our session homepage:

https://cd-make.net/special-sessions/make-explainable-ai/

Amii is part of the Pan-Canadian AI Strategy. It conducts leading-edge research to push the boundaries of academic knowledge and forges business collaborations, both locally and internationally, to create innovative, adaptive artificial intelligence and machine learning solutions to the toughest problems facing Alberta and the world.

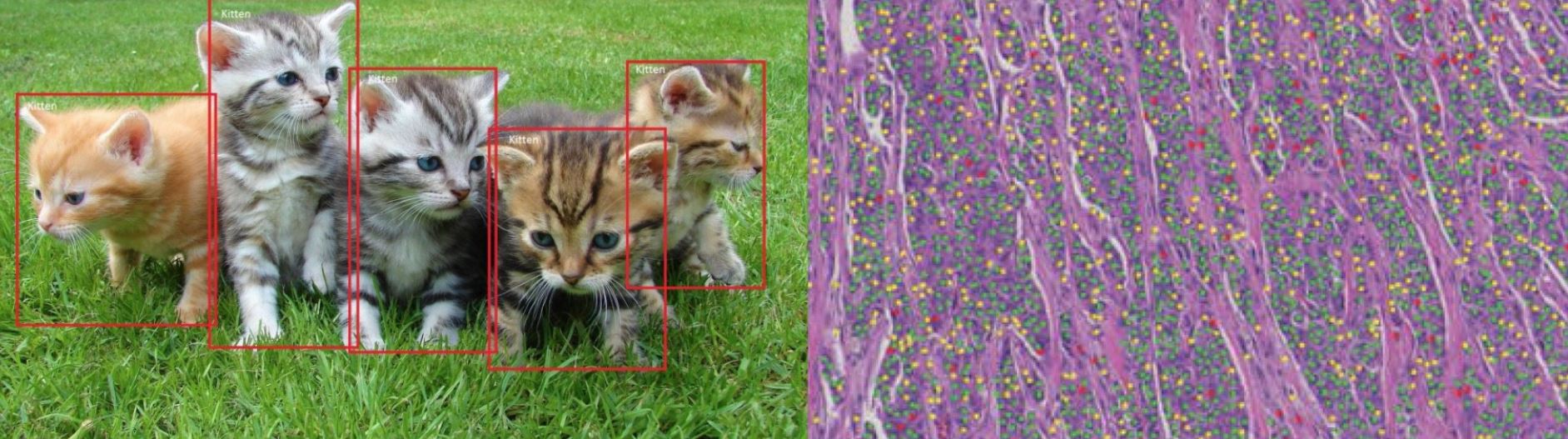

Here are some snapshots:

R.G. (Randy) Goebel is Professor of Computing Science at the University of Alberta, in Edmonton, Alberta, Canada, and concurrently holds the positions of Associate Vice President Research and Associate Vice President Academic. He is also co-founder and principal investigator of the Alberta Innovates Centre for Machine Learning. He holds B.Sc., M.Sc. and Ph.D. degrees in computer science from the Universities of Regina, Alberta, and British Columbia. He has held faculty appointments at the University of Waterloo, the University of Tokyo, Multimedia University (Malaysia), and Hokkaido University, and has worked at a variety of research institutes around the world, including DFKI (Germany), NICTA (Australia), and NII (Tokyo). Most recently, he was Chief Scientist at Alberta Innovates Technology Futures. His research interests include applications of machine learning to systems biology, visualization, and web mining, as well as natural language processing, web semantics, and belief revision. He has experience working on industrial research projects in scheduling, optimization, and natural language technology applications.

Here is Randy’s homepage at the University of Alberta:

https://www.ualberta.ca/science/about-us/contact-us/faculty-directory/randy-goebel

The University of Alberta in Edmonton hosts approximately 39,000 students from all around the world. It is among the top five universities in Canada and, together with Toronto and Montreal, THE center of artificial intelligence and machine learning in Canada.