Welcome to the Seminar Explainable AI [Syllabus, pdf, 85kB]

Here you can find our open access overview paper for students:

https://doi.org/10.1007/978-3-031-04083-2_2

Artificial Intelligence (AI) and Machine Learning (ML) allow problems to be solved automatically without human intervention, and Deep Learning (DL) in particular is very successful. In the medical field, however, a medical doctor must be able to understand why an algorithm has come to a certain result. Consequently, the field of Explainable AI (xAI) is gaining interest. xAI studies the transparency and traceability of opaque AI/ML, and a variety of methods already exist. For example, layer-wise relevance propagation can highlight those parts of the inputs to, and representations in, a neural network that caused a result. This is an important first step towards ensuring that end users can assume responsibility for AI-assisted decision making. Interactive ML (iML) adds the component of human expertise to these processes by enabling experts to re-enact and retrace the results (e.g. through plausibility checks). In summary, Explainable AI deals with implementing transparency and traceability for statistical black-box machine learning methods, particularly deep learning (DL); in the medical domain, however, there is a need to go beyond explainable AI. To reach a level of explainable medicine we need causability. In the same way that usability encompasses measurements for the quality of use, causability encompasses measurements for the quality of explanations.

This course consists of 10 modules. Module 0 is optional and intended for students who need a refresher on probability and measuring information. Module 9 on “Ethical, Legal and Social Issues of Explainable AI” is mandatory (please watch this video before attending class). The remaining Modules 1 to 8 are adapted to the individual prior knowledge, needs and requirements of the class. The course deals mainly with methodological aspects of explainable AI in a research-based teaching (RBT) style; we speak Python, and experiments will be done with the Kandinsky-Patterns, our “Swiss Army knife” for the study of explainable AI (please watch this video for an easy introduction).

https://github.com/human-centered-ai-lab/cla-Seminar-explainable-AI-2019

MOTIVATION for this course

This course is an extension of last year's “Methods of explainable AI”, which was a natural offspring of the interactive Machine Learning (iML) and decision-making courses (see courses held over the last years). Today the most successful AI/machine learning models, e.g. deep learning (see the difference AI-ML-DL), are often considered to be “black-boxes”, which makes it difficult to re-enact them and to answer the question of why a certain machine decision has been reached. A general serious drawback is that statistical learning models have no explicit declarative knowledge representation. This means that such models have enormous difficulty in generating underlying explanatory structures, which limits their ability to understand the context. Here the human-in-the-loop is exceptionally good, and since our goal is to augment human intelligence with artificial intelligence, we should rather speak of having a computer-in-the-loop. This is not necessary in some application domains (e.g. autonomous vehicles), but it is essential in our application domain, the medical domain (see our digital pathology project). A medical doctor is required, on demand, to retrace a result in a human-understandable way. This calls not only for explainable models, but also for explanation interfaces (see AK HCI course). Interestingly, early AI systems (rule-based systems) were explainable to a certain extent within a well-defined problem space. Therefore this course will also provide a background on decision support systems from the early 1970s (e.g. MYCIN, or GAMUTS of Radiology). In the class of 2019 we will focus even more on making inferences from observational data and on reasoning under uncertainty, which quickly brings us into the field of causality. This matters because in the biomedical domain (see e.g. our digital pathology project) we need to discover and understand unexpected, interesting and relevant patterns in data, both to gain knowledge and for troubleshooting.

GOAL of this course

This graduate course follows a research-based teaching (RBT) approach and provides an overview of selected state-of-the-art methods for making AI transparent, re-traceable, re-enactable, understandable and, consequently, explainable. Note: We speak Python [here a source for beginners], here is a good intro to [Colab], or read our [tutorial paper].

BACKGROUND

Explainability/interpretability is motivated by the lack of transparency of so-called black-box approaches, which do not foster trust [6] and acceptance of AI generally and ML specifically. Rising legal and privacy requirements, e.g. the European General Data Protection Regulation (GDPR, in effect since May 2018), will make black-box approaches difficult to use in business, because they are often not able to explain why a machine decision has been made (see explainable AI).

Consequently, the field of Explainable AI has recently been gaining international awareness and interest, because rising legal, ethical, and social requirements make it mandatory to enable a human, on request, to understand and to explain why a machine decision has been made [see Wikipedia on Explainable Artificial Intelligence]. Note: this does not mean that it is always necessary to explain everything, but that it must be possible to explain a decision when necessary, e.g. for general understanding, for teaching, for learning, for research, in court, or even on demand by a citizen (right to explanation).

HINT

If you need a statistics/probability refresher go to the Mini-Course MAKE-Decisions and review the statistics/probability primer: https://human-centered.ai/mini-course-make-decision-support/

If you are particularly interested, visit the course Machine Learning for Health Informatics:

https://human-centered.ai/machine-learning-for-health-informatics-class-2020/

Module 00 – Primer on Probability, Information and Learning from Data (optional)

Keywords: probability, data, information, entropy measures

Learning goal: a refresher on the most essential mathematical background needed

Topic 00: Mathematical Notations

Topic 01: Probability Distribution and Probability Density

Topic 02: Expectation and Expected Utility Theory

Topic 03: Joint Probability and Conditional Probability

Topic 04: Independent and Identically Distributed (IID) Data *)

Topic 05: Bayes and Laplace

Topic 06: Measuring Information: Kullback-Leibler Divergence and Entropy
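Topic 06 can be made concrete in a few lines of Python. The following is a minimal sketch, assuming discrete distributions given as lists of probabilities; the function names `entropy` and `kl_divergence` are our own and are not taken from the lecture slides. Both quantities are computed in bits, and the example shows that the KL divergence is not symmetric.

```python
import math

def entropy(p, base=2.0):
    """Shannon entropy H(P) = -sum_i p_i * log(p_i); terms with p_i = 0 contribute 0."""
    return -sum(pi * math.log(pi, base) for pi in p if pi > 0)

def kl_divergence(p, q, base=2.0):
    """Kullback-Leibler divergence D_KL(P || Q) = sum_i p_i * log(p_i / q_i).

    Returns infinity if some q_i = 0 where p_i > 0.
    """
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue          # 0 * log(0 / q) is taken to be 0
        if qi == 0:
            return math.inf   # P puts mass where Q has none
        total += pi * math.log(pi / qi, base)
    return total

fair = [0.5, 0.5]
biased = [0.9, 0.1]
print(entropy(fair))                # 1.0 bit
print(entropy(biased))              # about 0.469 bits
print(kl_divergence(biased, fair))  # about 0.531 bits
print(kl_divergence(fair, biased))  # about 0.737 bits, so D_KL is not symmetric
```

Base 2 gives bits; passing `base=math.e` would give nats instead.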

Lecture slides (3,519 kB): 00-PRIMER-probability-and-information-2019

Recommended reading for students:

[1] David J.C. MacKay 2003. Information theory, inference and learning algorithms, Cambridge: Cambridge University Press. Online available: https://www.inference.org.uk/itprnn/book.html

Slides online available: https://www.inference.org.uk/itprnn/Slides.shtml

[2] An excellent resource is: David Poole, Alan Mackworth & Randy Goebel 1998. Computational intelligence: a logical approach, New York, Oxford University Press. A new edition is available online, along with excellent student resources:

https://artint.info/2e/html/ArtInt2e.html

*) IID data are what we never have in the medical domain.

Module 01 – Introduction: From automatic ML to interactive ML and xAI

Keywords: HCI-KDD approach, integrative AI/ML, complexity, automatic ML, interactive ML, explainable AI

Learning goal: students get a broad overview of the HCI-KDD approach (combining methods from Cognitive Science/Psychology and Computer Science/Artificial Intelligence) and of the differences between automatic/autonomous machine learning (aML) and interactive machine learning (iML).

Topic 00: Reflection – follow up from Module 0 – dealing with probability and information

Topic 01: The HCI-KDD approach: Towards a human-centred AI ecosystem [1]

Topic 02: The complexity of the application area health informatics [2]

Topic 03: Probabilistic information

Topic 04: Automatic ML (aML)

Topic 05: Interactive ML (iML)

Topic 06: From interactive ML to explainable AI (ex-AI)

Lecture slides 2×2 (26,755 kB): contact lecturer for slide deck

[1] Andreas Holzinger (2013). Human–Computer Interaction and Knowledge Discovery (HCI-KDD): What is the benefit of bringing those two fields to work together? In: Cuzzocrea, Alfredo, Kittl, Christian, Simos, Dimitris E., Weippl, Edgar & Xu, Lida (eds.) Multidisciplinary Research and Practice for Information Systems, Springer Lecture Notes in Computer Science LNCS 8127. Heidelberg, Berlin, New York: Springer, pp. 319-328, doi:10.1007/978-3-642-40511-2_22.

Online available in the IFIP Hyper Articles en Ligne (HAL), INRIA: https://hal.archives-ouvertes.fr/hal-01506781/document

[2] Andreas Holzinger, Matthias Dehmer & Igor Jurisica 2014. Knowledge Discovery and interactive Data Mining in Bioinformatics – State-of-the-Art, future challenges and research directions. Springer/Nature BMC Bioinformatics, 15, (S6), I1, doi:10.1186/1471-2105-15-S6-I1. https://bmcbioinformatics.biomedcentral.com/articles/10.1186/1471-2105-15-S6-I1

Module 02 – Decision Making and Decision Support: from the underlying principles to understanding the context

Keywords: information, decision, decision making, medical action

Learning goal: If we (want to) deal with “human-level AI”, then we should have at least a basic understanding of human intelligence, so here the students get a rough overview of human cognitive aspects.

Topic 00: Reflection – follow up from Module 1 – introduction

Topic 01: Medical action = Decision making

Topic 02: The underlying principles of intelligence and cognition

Topic 03: Human vs. Computer

Topic 04: Human Information Processing

Topic 05: Probabilistic decision theory

Topic 06: The problem of understanding context

Lecture slides 2×2 (31,120 kB): contact lecturer for slide set

Module 03 – From Decision Support Systems to Explainable AI and some principles of Causality

Keywords: Decision Support Systems, Recommender Systems, Artificial Advice Giver

Learning Goal: Students get a quick introduction to the history of decision support systems, which also gives a very good overview of classical AI, and to some examples from the medical domain, which bring us to the principles of causality and causal inference.

Topic 00: Reflection – follow up from Module 02

Topic 01: Decision Support Systems (DSS)

Topic 02: Do computers help make better decisions?

Topic 03: History of DSS = History of AI

Topic 04: Example: Towards Precision Medicine

Topic 05: Example: Case based Reasoning (CBR)

Topic 06: A few principles of causality

Lecture slides 2×2 (27,177 kB): contact lecturer for slide set

Module 04 – Top-Level overview of explanation Methodologies: from ante-hoc to post-hoc explainability

Keywords: Global explainability, local explainability, ante-hoc, post-hoc

Learning goal: In the fourth module the students get a coarse overview of explanation methods, i.e. a top-level bird's-eye view, before going into details.

Topic 00: Reflection – follow up from Module 3

Topic 01: Basics, Definitions (Explainability, Interpretability, …)

Topic 02: Please note: xAI is not new!

Topic 03: Examples for Ante-hoc models (using explainable models, interpretable machine learning)

Topic 04: Examples for Post-hoc models (making “black-box” models interpretable)

Topic 05: Explanation interfaces (future human-AI interaction)

Topic 06: A few words on metrics of xAI (measuring causability**)

Lecture slides (pdf, 4,758kB): 04-Explainable-AI-Global-Local-Antehoc-Posthoc-Overview

*) post-hoc = select a model and develop a technique to make it transparent; ante-hoc = select a model that is transparent and optimize it for your problem

**) see the recent work on Measuring the Quality of Explanations: The System Causability Scale (SCS)

Comparing Human and Machine Explanations https://link.springer.com/article/10.1007/s13218-020-00636-z

Module 05 – Selected Methods of explainable-AI Part I

Keywords: LIME, BETA, LRP, Deep Taylor Decomposition, Prediction Difference Analysis

Learning goal: Here we go deeper into details and discuss some state-of-the-art exAI methods.

Topic 00: Reflection – follow up from Module 4

Topic 01: LIME (Local Interpretable Model-Agnostic Explanations) – Ribeiro et al. (2016) [1]

Topic 02: BETA (Black Box Explanation through Transparent Approximation) – Lakkaraju et al. (2017) [2]

Topic 03: LRP (Layer-wise Relevance Propagation) – Bach et al. (2015) [3]

Topic 04: Deep Taylor Decomposition – Montavon et al. (2017) [4]

Topic 05: Prediction Difference Analysis – Zintgraf et al. (2017) [5]
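To give Topic 03 a concrete shape, here is a minimal NumPy sketch of the LRP-ε rule for a small fully connected ReLU network. It is our own simplification (dense layers only, one rule for all layers, no special input-layer rules) and not the reference implementation from the LRP toolbox; the function `lrp_epsilon` and its parameters are hypothetical. Relevance starts at the explained output score and is redistributed backwards in proportion to each input's contribution a_i · w_ij; with zero biases the input relevances sum approximately to that score (relevance conservation).

```python
import numpy as np

def lrp_epsilon(weights, biases, x, target, eps=1e-6):
    """LRP-epsilon for a small fully connected ReLU network (sketch).

    weights[l] has shape (n_in, n_out); hidden layers use ReLU, the last layer is linear.
    Returns (input relevance, network output).
    """
    # Forward pass: keep the input to every layer
    activations = [x]
    a = x
    n_layers = len(weights)
    for l, (W, b) in enumerate(zip(weights, biases)):
        z = a @ W + b
        a = np.maximum(0.0, z) if l < n_layers - 1 else z
        activations.append(a)
    output = activations[-1]

    # Only the score of the target class is explained
    relevance = np.zeros_like(output)
    relevance[target] = output[target]

    # Backward pass, layer by layer
    for l in range(n_layers - 1, -1, -1):
        W, b = weights[l], biases[l]
        a = activations[l]
        z = a @ W + b
        z = z + eps * np.where(z >= 0, 1.0, -1.0)  # epsilon stabiliser avoids division by ~0
        s = relevance / z                           # relevance per unit of pre-activation
        relevance = a * (s @ W.T)                   # redistribute in proportion to a_i * w_ij
    return relevance, output

rng = np.random.default_rng(0)
weights = [rng.normal(size=(4, 6)), rng.normal(size=(6, 3))]
biases = [np.zeros(6), np.zeros(3)]
x = rng.normal(size=4)
R, out = lrp_epsilon(weights, biases, x, target=1)
print(R, R.sum(), out[1])  # with zero biases, R.sum() is approximately out[1]
```

Larger values of `eps` absorb more relevance and give sparser, less noisy explanations; with non-zero biases part of the relevance is also absorbed by the bias terms.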

Lecture slides (pdf, 4243 kB): 05-Selected-Methods-exAI-Part-1-LIME-BETA-LRP-DTD-PDA

Reading for Students:

[1] Marco Tulio Ribeiro, Sameer Singh & Carlos Guestrin 2016. Model-Agnostic Interpretability of Machine Learning. arXiv:1606.05386.

[2] Himabindu Lakkaraju, Ece Kamar, Rich Caruana & Jure Leskovec 2017. Interpretable and Explorable Approximations of Black Box Models. arXiv:1707.01154.

[3] Sebastian Bach, Alexander Binder, Grégoire Montavon, Frederick Klauschen, Klaus-Robert Müller & Wojciech Samek 2015. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PloS one, 10, (7), e0130140, doi:10.1371/journal.pone.0130140. NOTE: Sebastian BACH is now Sebastian LAPUSCHKIN

[4] Grégoire Montavon, Wojciech Samek & Klaus-Robert Müller 2017. Methods for interpreting and understanding deep neural networks. arXiv:1706.07979.

[5] Luisa M. Zintgraf, Taco S. Cohen, Tameem Adel & Max Welling 2017. Visualizing deep neural network decisions: Prediction difference analysis. arXiv:1702.04595.

[6] The Explainable AI demos of the Fraunhofer Heinrich Hertz Institute in Berlin are an ideal showcase of the state of the art and demonstrate what Layer-wise Relevance Propagation can do and where its current limitations are: https://lrpserver.hhi.fraunhofer.de/

Additional methods include:

L-BFGS (Limited-Memory Broyden-Fletcher-Goldfarb-Shanno), see: Dong C. Liu & Jorge Nocedal 1989. On the limited memory BFGS method for large scale optimization. Mathematical programming, 45, (1-3), 503-528, doi:10.1007/BF01589116.

FGSM (Fast Gradient Sign Method), see: Ian J. Goodfellow, Jonathon Shlens & Christian Szegedy 2014. Explaining and harnessing adversarial examples. arXiv:1412.6572.

JBSA (Jacobian Based Saliency Approach), see: Daniel Jakubovitz & Raja Giryes 2018. Improving DNN robustness to adversarial attacks using Jacobian regularization. Proceedings of the European Conference on Computer Vision (ECCV 2018), 514-529.
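FGSM perturbs an input by a single step of size ε along the sign of the gradient of the loss with respect to the input, x_adv = x + ε · sign(∇x L). A minimal NumPy sketch, assuming a logistic-regression classifier so that the gradient of the binary cross-entropy, (p − y) · w, can be written down by hand; the weights, input and ε are made-up values. For a deep network the gradient would come from automatic differentiation instead.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_logistic(x, y, w, b, eps):
    """FGSM for a logistic-regression classifier (sketch).

    For the binary cross-entropy loss, d loss / d x = (p - y) * w.
    """
    p = sigmoid(x @ w + b)
    grad_x = (p - y) * w
    return x + eps * np.sign(grad_x)   # step that increases the loss fastest in the L-infinity sense

w = np.array([1.5, -2.0, 0.5])
b = 0.1
x = np.array([0.4, -0.3, 0.2])
y = 1.0
x_adv = fgsm_logistic(x, y, w, b, eps=0.5)
print(sigmoid(x @ w + b))      # about 0.80: correctly classified as class 1
print(sigmoid(x_adv @ w + b))  # about 0.35: the perturbed input flips to class 0
```

Every feature moves by exactly ε, which is why FGSM is usually described as an L∞-bounded attack.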

Module 06 – Selected Methods of explainable-AI Part II

Keywords: Deconvolution, Inverting, Guided Backpropagation, Deep Generator Networks, TCAV

Learning goal: Students learn the principles of heatmapping and some approaches towards visual concept learning [6]

Topic 00: Reflection – follow up from Module 5

Topic 01: Visualizing Convolutional Neural Nets with Deconvolution – Zeiler & Fergus (2014) [1]

Topic 02: Inverting Convolutional Neural Networks – Mahendran & Vedaldi (2015) [2]

Topic 03: Guided Backpropagation – Springenberg et al. (2015) [3]

Topic 04: Deep Generator Networks – Nguyen et al. (2016) [4]

Topic 05: Testing with Concept Activation Vectors (TCAV) – Kim et al. (2018) [5]
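The two steps of TCAV (Topic 05), learning a concept activation vector (CAV) and counting how often the class score increases along it, can be sketched in NumPy. Assumptions: layer activations and class-logit gradients would normally come from a trained network; here they are synthetic, and a hand-rolled logistic regression stands in for the linear classifier that separates concept from random activations. The function names are hypothetical and are not the API of the tensorflow/tcav package, and the statistical testing over many random sets is omitted.

```python
import numpy as np

def train_cav(concept_acts, random_acts, lr=0.1, steps=500):
    """Concept activation vector: unit normal of a linear classifier separating
    concept activations (label 1) from random activations (label 0).
    Plain logistic regression fitted by batch gradient descent."""
    X = np.vstack([concept_acts, random_acts])
    y = np.concatenate([np.ones(len(concept_acts)), np.zeros(len(random_acts))])
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))
        w -= lr * X.T @ (p - y) / len(y)
        b -= lr * np.mean(p - y)
    return w / np.linalg.norm(w)

def tcav_score(class_grads, cav):
    """Fraction of class examples whose directional derivative grad_h(logit) . cav is positive."""
    return float(np.mean(class_grads @ cav > 0))

rng = np.random.default_rng(0)
d = 5
# Synthetic layer activations: the hypothetical concept shifts the first dimension
concept_acts = rng.normal(size=(50, d)) + np.array([2.0, 0.0, 0.0, 0.0, 0.0])
random_acts = rng.normal(size=(50, d))
cav = train_cav(concept_acts, random_acts)

# Hypothetical class logit g(h) = sum(u * tanh(h)); its gradient is u * (1 - tanh(h)**2)
u = np.array([1.0, 0.2, -0.2, 0.1, 0.0])
class_acts = rng.normal(size=(100, d))
class_grads = u * (1.0 - np.tanh(class_acts) ** 2)
print(cav.round(2), tcav_score(class_grads, cav))
```

A score near 1 means the concept direction almost always pushes the class logit up; a score near 0.5 is what one would expect from an irrelevant concept.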

Lecture slides (pdf, 2,676 kB) 06-Selected-Methods-exAI-Part2-Deconv-inv-guided-back-deep-gen-TCAV

Reading for Students:

[1] Matthew D. Zeiler & Rob Fergus 2014. Visualizing and understanding convolutional networks. In: Fleet, D., Pajdla, T., Schiele, B. & Tuytelaars, T. (eds.) ECCV, Lecture Notes in Computer Science LNCS 8689. Cham: Springer, pp. 818-833, doi:10.1007/978-3-319-10590-1_53.

[2] Aravindh Mahendran & Andrea Vedaldi. Understanding deep image representations by inverting them. Proceedings of the IEEE conference on computer vision and pattern recognition, 2015. 5188-5196, doi:10.1109/CVPR.2015.7299155.

[3] Jost Tobias Springenberg, Alexey Dosovitskiy, Thomas Brox & Martin Riedmiller 2014. Striving for simplicity: The all convolutional net. arXiv:1412.6806.

[4] Anh Nguyen, Alexey Dosovitskiy, Jason Yosinski, Thomas Brox & Jeff Clune. Synthesizing the preferred inputs for neurons in neural networks via deep generator networks. Advances in Neural Information Processing Systems (NIPS 2016), 2016 Barcelona. 3387-3395. Read the reviews: https://media.nips.cc/nipsbooks/nipspapers/paper_files/nips29/reviews/1685.html

[5] Been Kim, Martin Wattenberg, Justin Gilmer, Carrie Cai, James Wexler & Fernanda Viegas. Interpretability beyond feature attribution: Quantitative testing with concept activation vectors (TCAV). International Conference on Machine Learning, ICML 2018. 2673-2682, Stockholm.

[6] Andreas Holzinger, Michael Kickmeier-Rust & Heimo Müller 2019. KANDINSKY Patterns as IQ-Test for Machine Learning. International Cross-Domain Conference for Machine Learning and Knowledge Extraction, Lecture Notes in Computer Science LNCS 11713. Cham: Springer, pp. 1-14, doi: 10.1007/978-3-030-29726-8_1. More info see: https://human-centered.ai/project/kandinsky-patterns/

Module 07 – Selected Methods of explainable-AI Part III

Keywords: Model understanding, Cell state analysis, fitted additive, iML

Learning goal: Here students learn approaches to understanding the model, particularly feature visualization in contrast to deep visualization, reinforced by some hands-on Python practicals.

Topic 00: Reflection – follow up from Module 06

Topic 01: Understanding the Model: Feature Visualization – Erhan et al. (2009) [1]

Topic 02: Understanding the Model: Deep Visualization – Yosinski et al. (2015) [2]

Topic 03: Recurrent Neural Networks cell state analysis – Karpathy et al. (2015) [3]

Topic 04: Fitted Additive – Caruana et al. (2015) [4]

Topic 05: Interactive Machine Learning with the human-in-the-loop – Holzinger et al. (2017) [5]
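Feature visualization in the sense of Erhan et al. (Topic 01) treats interpretation as an optimisation problem: find the input that maximises a unit's activation under a norm constraint. A minimal NumPy sketch for a single hypothetical tanh unit, where the answer is known in closed form (the input aligned with the weight vector), so the result is easy to check; in a real network the gradient would come from backpropagation through all layers, and the function name and parameters here are our own.

```python
import numpy as np

def activation_maximization(w, b, radius=1.0, lr=0.2, steps=500, seed=0):
    """Projected gradient ascent on the input x to maximise h(x) = tanh(w . x + b)
    subject to ||x|| = radius (a single hypothetical unit)."""
    rng = np.random.default_rng(seed)
    x = rng.normal(size=w.shape)
    x *= radius / np.linalg.norm(x)        # start on the sphere
    for _ in range(steps):
        h = np.tanh(x @ w + b)
        grad = (1.0 - h ** 2) * w          # dh/dx, computed analytically here
        x = x + lr * grad                  # ascent step
        x *= radius / np.linalg.norm(x)    # project back onto the sphere
    return x

w = np.array([0.5, -1.0, 0.25])
x_star = activation_maximization(w, b=0.0)
print(x_star, w / np.linalg.norm(w))       # the two should (nearly) coincide
```

Without the norm constraint the activation could be increased indefinitely (until tanh saturates), which is why the input is projected back onto the sphere after every step.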

Lecture slides (pdf, 4,690 kB): 07-Selected-Methods-exAI-Part3-feature-vis-deep-fitted-additive-iML

Reading for Students:

[1] Dumitru Erhan, Yoshua Bengio, Aaron Courville & Pascal Vincent 2009. Visualizing higher-layer features of a deep network. Technical Report 1341, Departement d’Informatique et Recherche Operationnelle, University of Montreal. [pdf available here]

[2] Jason Yosinski, Jeff Clune, Anh Nguyen, Thomas Fuchs & Hod Lipson 2015. Understanding neural networks through deep visualization. arXiv:1506.06579. Please watch this video: https://www.youtube.com/watch?v=AgkfIQ4IGaM

You can find the code here: https://yosinski.com/deepvis (cool stuff!)

[3] Andrej Karpathy, Justin Johnson & Li Fei-Fei 2015. Visualizing and understanding recurrent networks. arXiv:1506.02078. Code available here: https://github.com/karpathy/char-rnn (awesome!)

[4] Rich Caruana, Yin Lou, Johannes Gehrke, Paul Koch, Marc Sturm & Noemie Elhadad. Intelligible models for healthcare: Predicting pneumonia risk and hospital 30-day readmission. 21st ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’15), 2015 Sydney. ACM, 1721-1730, doi:10.1145/2783258.2788613.

[5] Andreas Holzinger, et al. 2018. Interactive machine learning: experimental evidence for the human in the algorithmic loop. Applied Intelligence, doi:10.1007/s10489-018-1361-5.

Module 08 – Selected Methods of explainable-AI Part IV

Keywords: Sensitivity, Gradients, DeepLIFT, Grad-CAM

Learning goal: Students get an overview of various approaches to sensitivity analysis and gradients, with a closer look at some specific methods, e.g., DeepLIFT and Grad-CAM.

Topic 00: Reflection – follow up from Module 07

Topic 01: Sensitivity Analysis – Simonyan et al. (2013) [1], Baehrens et al. (2010) [2]

Topic 02: Gradients: General overview [3]

Topic 03: Gradients: DeepLIFT – Shrikumar et al. (2015) [4]

Topic 04: Gradients: Grad-CAM – Selvaraju et al. (2016) [5]

Topic 05: Gradients: Integrated Gradients – Sundararajan et al. (2017) [6]

Please also read the interesting paper on “Gradient vs. Decomposition” by Montavon et al. (2018) [7].
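Sensitivity analysis (Topic 01) and integrated gradients (Topic 05) both start from the gradient of the model output with respect to the input. A minimal NumPy sketch, assuming a small hypothetical model f(x) = v · tanh(Wx) whose gradient is derived by hand (a real network would use automatic differentiation). SA is taken as the squared gradient; IG is approximated with a midpoint Riemann sum along the straight path from an all-zero baseline, and its attributions should sum approximately to f(x) − f(baseline), the completeness property that plain gradients lack.

```python
import numpy as np

# Hypothetical differentiable model f(x) = v . tanh(W x) with a hand-derived gradient
W = np.array([[1.0, -2.0, 0.5],
              [0.3, 0.8, -1.2]])
v = np.array([1.5, -0.7])

def f(x):
    return float(v @ np.tanh(W @ x))

def grad_f(x):
    h = np.tanh(W @ x)
    return W.T @ (v * (1.0 - h ** 2))

def sensitivity(x):
    """Sensitivity analysis: squared partial derivatives of f at x."""
    return grad_f(x) ** 2

def integrated_gradients(x, baseline, steps=200):
    """(x - x') times the average gradient along the straight path x' -> x (midpoint Riemann sum)."""
    alphas = (np.arange(steps) + 0.5) / steps
    avg_grad = np.mean([grad_f(baseline + a * (x - baseline)) for a in alphas], axis=0)
    return (x - baseline) * avg_grad

x = np.array([0.8, -0.5, 1.0])
baseline = np.zeros(3)
ig = integrated_gradients(x, baseline)
print("SA:", sensitivity(x))
print("IG:", ig, "sum:", ig.sum(), "f(x) - f(x'):", f(x) - f(baseline))
```

The choice of baseline matters for IG: an all-zero input is a common default, but for images a black image, a blurred image or noise are also used.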

Lecture slides (pdf, 2755 kB) : 08-Selected-Methods-exAI-Part4-Gradients-Sensitivity

Reading for Students:

[1] Karen Simonyan, Andrea Vedaldi & Andrew Zisserman 2013. Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv:1312.6034.

[2] David Baehrens, Timon Schroeter, Stefan Harmeling, Motoaki Kawanabe, Katja Hansen & Klaus-Robert Müller 2010. How to explain individual classification decisions. Journal of Machine Learning Research, 11, (Jun), 1803-1831.

http://www.jmlr.org/papers/v11/baehrens10a.html

[4] Avanti Shrikumar, Peyton Greenside & Anshul Kundaje 2017. Learning important features through propagating activation differences. arXiv:1704.02685.

https://github.com/kundajelab/deeplift

Youtube Intro: https://www.youtube.com/watch?v=v8cxYjNZAXc&list=PLJLjQOkqSRTP3cLB2cOOi_bQFw6KPGKML

[5] Ramprasaath R. Selvaraju, Michael Cogswell, Abhishek Das, Ramakrishna Vedantam, Devi Parikh & Dhruv Batra. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. ICCV, 2017. 618-626.

Module 09 (mandatory) – Ethical, Legal and Social Issues of Explainable AI

Keywords: AI in … Law, Ethics, Society, Governance; Compliance, Fairness, Accountability, Transparency, Ethics-by-design, Bias, Adversarial Examples

Learning goal: The aim of this mandatory module is to make students of software engineering aware of the sociological, economic and legal aspects of current AI. Please watch this video first to become aware of the unavoidable danger of bias in machine learning: https://www.coursera.org/lecture/google-machine-learning/machine-learning-and-human-bias-ZxQaP

Topic 01: Repetition: From Causality to ethical responsibility

Topic 02: Automatic-Automated-Autonomous: Human-in-the-Loop, Computer-in-the-Loop -> Human-in-control

Topic 03: AI ethics: Legal accountability and moral dilemmas [1]

Topic 04: AI ethics: Algorithms and the proof of explanations (truth vs. trust) [2]

Topic 05: Responsible AI [3] and examples from computational sociology (fraud, bias, manipulation, adversarial examples, etc.)

Lecture slides (2,820 kB): 09-Ethical-Legal-Social-Issues-Explainable-AI

Reading for students:

[0] A very valuable resource can be found at the Future of Privacy Forum:

https://fpf.org/artificial-intelligence-and-machine-learning-ethics-governance-and-compliance-resources/

[1] Neil M. Richards & William D. Smart 2016. How should the law think about robots? In: Calo, Ryan, Froomkin, A. Michael & Kerr, Ian (eds.) Robot law. Cheltenham: Edward Elgar Publishing, pp. 1-20, doi:10.4337/9781783476732. [freely available online]

[2] Simpson’s reversal paradox: https://www.youtube.com/watch?v=ebEkn-BiW5k

Martin Gardner 1976. Fabric of inductive logic, and some probability paradoxes. Scientific American, 234, (3), 119-124, doi:10.1038/scientificamerican0376-119. This has been discussed in an excellent manner by Judea Pearl, see: Judea Pearl 2014. Comment: understanding Simpson’s paradox. The American Statistician, 68, (1), 8-13, doi:10.1080/00031305.2014.876829.

[3] Ronald Stamper 1988. Pathologies of AI: Responsible use of artificial intelligence in professional work. AI & society, 2, (1), 3-16, doi: 10.1007/BF01891439.

[4] IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems Ethics-in-Action https://ethicsinaction.ieee.org/

[5] Ethics Guidelines for Trustworthy AI from the High-Level Expert Group on AI, presented on 8 April 2019: https://ec.europa.eu/digital-single-market/en/news/ethics-guidelines-trustworthy-ai

[6] ISO/IEC JTC 1/SC 42 Artificial Intelligence https://www.iso.org/committee/6794475.html

(WG 3 is working on trustworthiness, i.e. on exploring ways to establish trust in AI/ML systems whilst at the same time minimizing threats and risks; they have identified transparency and robustness as key for ethically and societally responsible future AI systems.)

[7] A nice and easy introduction to the problem of bias and fairness in machine learning: https://www.youtube.com/watch?v=gV0_raKR2UQ

[8] The psychological tendency of favoring one thing or opinion over another is reflected in every aspect of our lives, creating both latent and overt biases toward everything we see, hear, and do. It is therefore of utmost importance that students are aware that bias always exists. For example, most mature societies raise awareness of social bias through affirmative-action programs, and, while awareness alone does not completely alleviate the problem, it helps guide us toward a solution, see: https://dl.acm.org/doi/10.1145/3209581

[9] For the final class discussion, please

a) watch the talk by Kate Crawford on “the trouble of bias” at NIPS 2017: https://www.youtube.com/watch?v=fMym_BKWQzk

b) read carefully through the “Principles for Accountable Algorithms and a Social Impact Statement for Algorithms”, see: https://perma.cc/8F5U-C9JJ

[10] 2011 Federal Reserve System SR 11-7: Guidance on Model Risk Management: https://www.federalreserve.gov/supervisionreg/srletters/sr1107.htm

[11] Benefits and risks of Artificial Intelligence: https://futureoflife.org/background/benefits-risks-of-artificial-intelligence
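Simpson's reversal paradox (reading [2] above) can be reproduced in a few lines of Python. The counts below are hypothetical, chosen so that treatment A has the higher recovery rate within each severity group while treatment B looks better once the groups are pooled, because A was mostly given to severe cases. Which comparison is the right one depends on the causal structure (here severity influences both the choice of treatment and recovery), which is the point Pearl makes.

```python
# Hypothetical recovery counts: (recovered, treated) per treatment and severity group
data = {
    "A": {"mild": (81, 87), "severe": (192, 263)},
    "B": {"mild": (234, 270), "severe": (55, 80)},
}

def rate(recovered, treated):
    return recovered / treated

for group in ("mild", "severe"):
    ra, rb = rate(*data["A"][group]), rate(*data["B"][group])
    print(f"{group:>7}: A {ra:.2f}  B {rb:.2f}")   # A is better in both groups

def pooled(treatment):
    """Recovery rate after ignoring (summing over) the severity groups."""
    recovered = sum(r for r, _ in data[treatment].values())
    treated = sum(t for _, t in data[treatment].values())
    return recovered / treated

print(f" pooled: A {pooled('A'):.2f}  B {pooled('B'):.2f}")  # ...but B is better overall
```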

Extra Module – Causality Learning and Causality for Decision Support

Keywords: Causality, Graphical Causal Models, Bayesian Networks, Directed Acyclic Graphs

Topic 01: Making inferences from observed and unobserved variables and reasoning under uncertainty [1]

Topic 02: Factuals, Counterfactuals [2], Counterfactual Machine Learning and Causal Models [3]

Topic 03: Probabilistic Causality Examples

Topic 04: Causality in time series (Granger Causality)

Topic 05: Psychology of causation

Topic 06: Causal Inference in Machine Learning
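The idea behind Topic 04: x is said to Granger-cause y if past values of x improve the prediction of y beyond what y's own past already provides. A minimal NumPy sketch on synthetic data, assuming linear autoregressions fitted by least squares and compared with an F statistic; the function names and the simulated process are our own, and no p-value is computed (for real analyses a tested routine such as statsmodels' `grangercausalitytests` is preferable). Note that Granger causality is a statement about predictability, not about interventions.

```python
import numpy as np

def lagged(series, lags):
    """Columns series[t-1], ..., series[t-lags] for t = lags .. n-1."""
    n = len(series)
    return np.column_stack([series[lags - k : n - k] for k in range(1, lags + 1)])

def granger_f(y, x, lags=2):
    """F statistic: does adding lagged x to an autoregression of y reduce the residuals?"""
    target = y[lags:]
    ones = np.ones((len(target), 1))
    restricted = np.hstack([ones, lagged(y, lags)])    # y explained by its own past
    full = np.hstack([restricted, lagged(x, lags)])    # ... plus the past of x

    def rss(design):
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ coef
        return float(resid @ resid)

    rss_r, rss_f = rss(restricted), rss(full)
    df_num = lags
    df_den = len(target) - full.shape[1]
    return ((rss_r - rss_f) / df_num) / (rss_f / df_den)

# Synthetic process in which x drives y with a one-step delay
rng = np.random.default_rng(1)
n = 500
x = rng.normal(size=n)
y = np.zeros(n)
for t in range(1, n):
    y[t] = 0.5 * y[t - 1] + 0.8 * x[t - 1] + 0.3 * rng.normal()

print(granger_f(y, x))  # large: the past of x helps to predict y
print(granger_f(x, y))  # small: the past of y does not help to predict x
```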

Lecture slides 2×2 (15,544 kB): contact lecturer for slide deck

Reading for students:

[1] Judea Pearl 1988. Evidential reasoning under uncertainty. In: Shrobe, Howard E. (ed.) Exploring artificial intelligence. San Mateo (CA): Morgan Kaufmann, pp. 381-418.

[2] Matt J. Kusner, Joshua Loftus, Chris Russell & Ricardo Silva. Counterfactual fairness. In: Guyon, Isabelle, Luxburg, Ulrike Von, Bengio, Samy, Wallach, Hanna, Fergus, Rob & Vishwanathan, S.V.N., eds. Advances in Neural Information Processing Systems 30 (NIPS 2017), 2017. 4066-4076.

[3] Judea Pearl 2009. Causality: Models, Reasoning, and Inference (2nd Edition), Cambridge, Cambridge University Press.

[4] Judea Pearl 2018. Theoretical Impediments to Machine Learning With Seven Sparks from the Causal Revolution. arXiv:1801.04016.

Extra Module – Testing and Evaluation of Machine Learning Algorithms

Keywords: performance, metrics, error, accuracy

Topic 01: Test data and training data quality

Topic 02: Performance measures (confusion matrix, ROC, AUC)

Topic 03: Hypothesis testing and estimating

Topic 04: Comparison of machine learning algorithms

Topic 05: The “no-free-lunch” theorem

Topic 06: Measuring beyond accuracy (simplicity, scalability, interpretability, learnability, …)
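Topic 02 can be illustrated in plain Python. A minimal sketch with made-up labels and scores: it derives the confusion-matrix counts at a 0.5 threshold and computes the ROC AUC through its equivalence with the probability that a randomly chosen positive receives a higher score than a randomly chosen negative (ties counted as 1/2). The function names are our own; libraries such as scikit-learn provide tested versions.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, FP, FN, TN) for binary labels 0/1."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn

def roc_auc(y_true, scores):
    """AUC = P(score of a random positive > score of a random negative), ties count 1/2."""
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

y_true = [1, 1, 0, 1, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
print(tp, fp, fn, tn)              # 3 1 1 3
print((tp + tn) / len(y_true))     # accuracy 0.75
print(roc_auc(y_true, scores))     # 0.75
```

Unlike accuracy, the AUC does not depend on the chosen threshold, which is one reason it is reported alongside the confusion matrix.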

Lecture slides 2×2 (12,756 kB): contact lecturer for slide set

Recommended Material:

https://github.com/josephmisiti/awesome-machine-learning

Extra Module – How to measure behavior and understanding

Keywords: performance, metrics, error, accuracy

Topic 01: Fundamentals to measure and evaluate human intelligence [1]

Topic 02: Low cost biometric technologies: 2D/3D cameras, eye-tracking, heart sensors

Topic 03: Advanced biometric technologies: EMG/ECG/EOG/PPG/GSR

Topic 04: Thinking-Aloud Technique

Topic 05: Microphone/Infrared sensor arrays

Topic 06: Affective computing: measuring emotion and stress [3]

Lecture slides 2×2 (17,111 kB): contact lecturer for slide set

Reading for students:

[1] José Hernández-Orallo 2017. The measure of all minds: evaluating natural and artificial intelligence, Cambridge University Press, doi:10.1017/9781316594179. Book Website: https://allminds.org

[2] Andrew T. Duchowski 2017. Eye tracking methodology: Theory and practice. Third Edition, Cham, Springer, doi:10.1007/978-3-319-57883-5.

[3] Christian Stickel, Martin Ebner, Silke Steinbach-Nordmann, Gig Searle & Andreas Holzinger 2009. Emotion Detection: Application of the Valence Arousal Space for Rapid Biological Usability Testing to Enhance Universal Access. In: Stephanidis, Constantine (ed.) Universal Access in Human-Computer Interaction. Addressing Diversity, Lecture Notes in Computer Science, LNCS 5614. Berlin, Heidelberg: Springer, pp. 615-624, doi:10.1007/978-3-642-02707-9_70.

Extra Module – The Theory of Explanations

Keywords: Explanation

Topic 01: What is a good explanation?

Topic 02: Explaining Explanations

Topic 03: The limits of explainability [2]

Topic 04: How to measure the value of an explanation

Topic 05: Practical Examples from the medical domain

Lecture slides 2×2 (5,914 kB): contact lecturer for slide set

Reading for students:

[1] Zachary C. Lipton 2016. The mythos of model interpretability. arXiv:1606.03490.

[2] https://www.media.mit.edu/articles/the-limits-of-explainability/

Extra Module – Material and Frameworks for Assignments (in no order and no preference)

A 01: LIME https://github.com/marcotcr/lime

A 02: LRP https://github.com/sebastian-lapuschkin/lrp_toolbox

A 03: TCAV https://github.com/tensorflow/tcav

A 04: DeepLIFT https://github.com/kundajelab/deeplift

A 05: SHAP https://github.com/slundberg/shap

A 06: AIX 360 https://github.com/IBM/AIX360

A 07: Activation Atlases https://github.com/tensorflow/lucid/#activation-atlas-notebooks

A 08: What If https://pair-code.github.io/what-if-tool/

Zoo of explainable AI methods (incomplete, just an overview for new students)

Note: This is just a service for students to get a quick overview of some methods; the list is in alphabetical order, not prioritized, and of course incomplete!

- BETA = Black Box Explanation through Transparent Approximation, a post-hoc explainability method developed by Lakkaraju et al. (2017); it learns two-level decision sets, where each rule explains the model behaviour. Himabindu Lakkaraju, Ece Kamar, Rich Caruana & Jure Leskovec 2017. Interpretable and Explorable Approximations of Black Box Models. arXiv:1707.01154.

- CPDA = Contextual Prediction Difference Analysis, extends PDA to be more robust against possible adversarial artifacts (see Module 05)

- Deep Taylor Decomposition = works by running a backward pass on the network using a predefined set of rules and produces a decomposition of the neural network output onto the input variables, which can then be visualized (see Module 05).

- GBP = Guided Back Propagation builds a saliency map by using gradients, similar to SA, but negative gradients are clipped during backpropagation; the explanation therefore concentrates on features having an excitatory (i.e. activating) effect on the output (see Module 06).

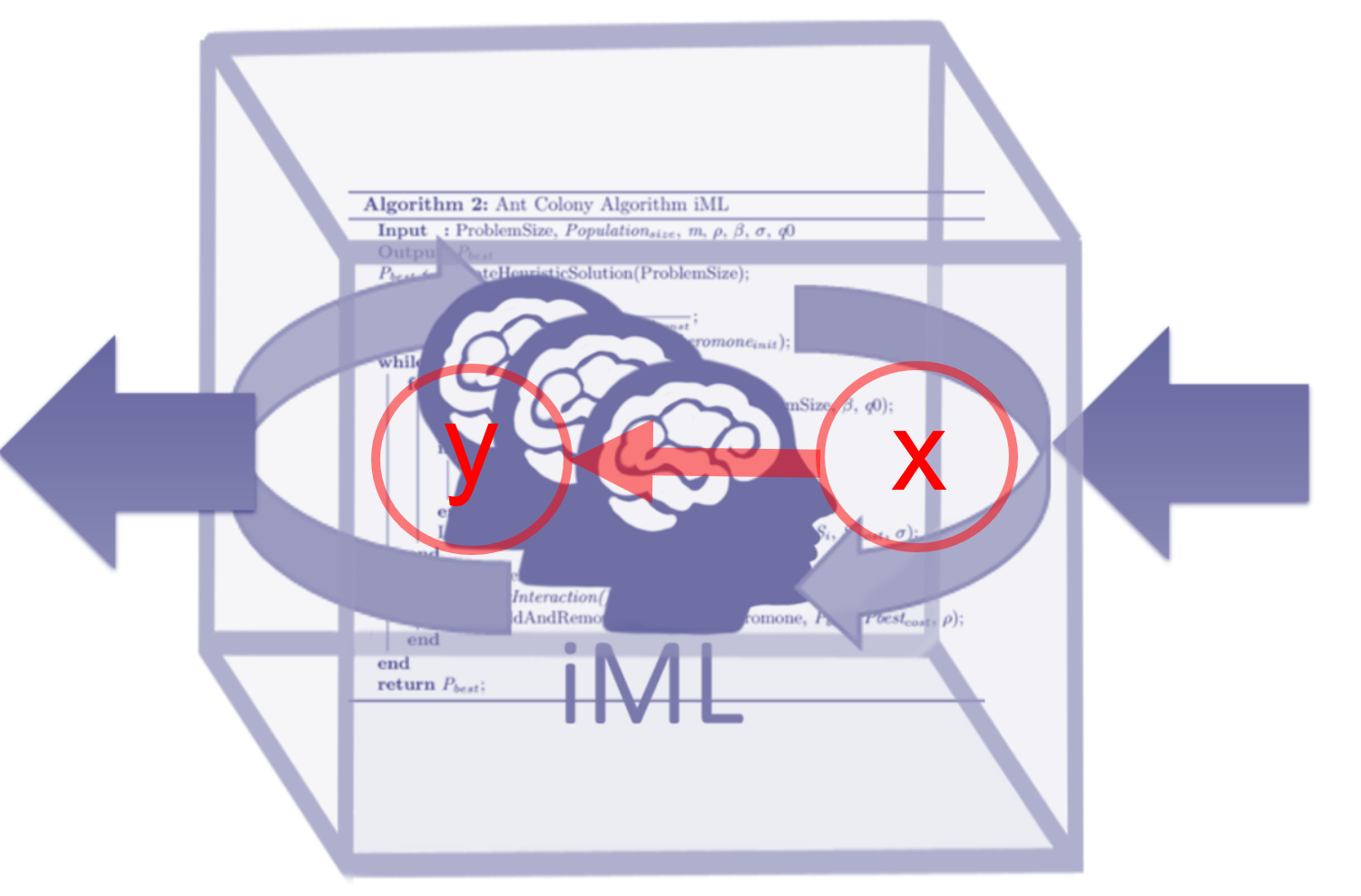

- iML = interactive Machine Learning with the Human-in-the-Loop is a versatile ante-hoc method for making use of the contextual understanding of a human expert, complementing the machine learning algorithms; Andreas Holzinger et al. 2018. Interactive machine learning: experimental evidence for the human in the algorithmic loop. Springer/Nature Applied Intelligence, doi:10.1007/s10489-018-1361-5 (see Module 07).

- LIME = Local Interpretable Model-Agnostic Explanations, a universally usable tool; Marco Tulio Ribeiro, Sameer Singh & Carlos Guestrin 2016. Model-Agnostic Interpretability of Machine Learning. arXiv:1606.05386.

- LRP = Layer-wise Relevance Propagation is a post-hoc method most suitable for deep neural networks; Sebastian Bach, Alexander Binder, Grégoire Montavon, Frederick Klauschen, Klaus-Robert Müller & Wojciech Samek 2015. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PloS one, 10, (7), e0130140, doi:10.1371/journal.pone.0130140. NOTE: Sebastian BACH is now Sebastian LAPUSCHKIN

- Prediction Difference Analysis = estimates the relevance of a feature x_n by measuring how the prediction changes if that feature is unknown (i.e. marginalized out).

- SA = Sensitivity Analysis produces local explanations for the prediction of a differentiable function f using the squared gradient with respect to the input data, and yields a saliency map (see Module 08).

- TCAV = Testing with Concept Activation Vectors follows the idea that humans work with high-level concepts (not with low-level features) and provides a global explanation for a class of interest in terms of concepts learned from examples (e.g. the concept of gender). This is highly relevant for social media, where it gives insight into how much a concept (e.g. gender, race, …) contributed to a given result (see Module 06).
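SA, GBP and LRP differ mainly in how they send a signal backwards through the network. The following minimal NumPy sketch makes this concrete on a hypothetical two-layer ReLU network with hand-picked weights and no biases; the weights, the input and all function names are illustrative assumptions, not code from the cited papers. Leaving out biases is a simplifying assumption under which the LRP relevances sum (up to the small stabilizer `eps`) exactly to the network output.

```python
import numpy as np

# Hypothetical toy network f(x) = w2 . relu(W1 @ x), without biases.
W1 = np.array([[1.0, -0.8, 0.2],
               [0.3, 0.8, -1.0],
               [-0.7, 0.4, 0.6],
               [0.5, 0.5, 0.5]])
w2 = np.array([1.0, -0.6, 0.8, 0.4])


def forward(x):
    z = W1 @ x               # hidden pre-activations
    h = np.maximum(z, 0.0)   # ReLU
    return z, h, float(w2 @ h)


def sensitivity_analysis(x):
    """SA: squared partial derivatives (df/dx_i)^2."""
    z, _, _ = forward(x)
    grad_z = w2 * (z > 0)    # ordinary ReLU backward pass
    return (W1.T @ grad_z) ** 2


def guided_backprop(x):
    """GBP: at the ReLU, pass the signal only where the unit was active
    AND the incoming gradient is positive (negative gradients are clipped)."""
    z, _, _ = forward(x)
    grad_z = w2 * (z > 0) * (w2 > 0)
    return W1.T @ grad_z


def lrp_epsilon(x, eps=1e-9):
    """LRP with the epsilon rule, from the output back to the input."""
    z, h, f = forward(x)

    def stab(v):
        # Add eps with the sign of v to avoid division by zero.
        return v + eps * np.where(v >= 0, 1.0, -1.0)

    # Output layer: share f among hidden units in proportion to h_j * w2_j.
    r_h = h * w2 / stab(f) * f
    # Hidden layer: share each r_h[j] among inputs in proportion to W1[j, i] * x_i.
    contrib = W1 * x                     # contrib[j, i] = W1[j, i] * x_i
    return (contrib / stab(z)[:, None] * r_h[:, None]).sum(axis=0)


x = np.array([1.0, 2.0, 0.5])
_, _, f = forward(x)
print("f(x) =", f)
print("SA  :", sensitivity_analysis(x))
print("GBP :", guided_backprop(x))
print("LRP :", lrp_epsilon(x), "sum =", lrp_epsilon(x).sum())
```

The LRP relevances sum to f(x), whereas the SA values (squared gradients) do not; in this bias-free ReLU example the LRP result also coincides with gradient × input.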

References from own work (references to related work will be given within the course):

[1] Andreas Holzinger, Chris Biemann, Constantinos S. Pattichis & Douglas B. Kell (2017). What do we need to build explainable AI systems for the medical domain? arXiv:1712.09923. https://arxiv.org/abs/1712.09923

[2] Andreas Holzinger, Bernd Malle, Peter Kieseberg, Peter M. Roth, Heimo Müller, Robert Reihs & Kurt Zatloukal (2017). Towards the Augmented Pathologist: Challenges of Explainable-AI in Digital Pathology. arXiv:1712.06657. https://arxiv.org/abs/1712.06657

[3] Andreas Holzinger, Markus Plass, Katharina Holzinger, Gloria Cerasela Crisan, Camelia-M. Pintea & Vasile Palade (2017). A glass-box interactive machine learning approach for solving NP-hard problems with the human-in-the-loop. arXiv:1708.01104.

[4] Andreas Holzinger (2016). Interactive Machine Learning for Health Informatics: When do we need the human-in-the-loop? Brain Informatics, 3, (2), 119-131, doi:10.1007/s40708-016-0042-6.

[5] Andreas Holzinger (2018). Explainable AI (ex-AI). Informatik-Spektrum, 41, (2), 138-143, doi:10.1007/s00287-018-1102-5.

https://link.springer.com/article/10.1007/s00287-018-1102-5

[6] Katharina Holzinger, Klaus Mak, Peter Kieseberg & Andreas Holzinger (2018). Can we trust Machine Learning Results? Artificial Intelligence in Safety-Critical Decision Support. ERCIM News, 112, (1), 42-43.

https://ercim-news.ercim.eu/en112/r-i/can-we-trust-machine-learning-results-artificial-intelligence-in-safety-critical-decision-support

[7] Andreas Holzinger et al. (2018). Interactive machine learning: experimental evidence for the human in the algorithmic loop. Applied Intelligence, doi:10.1007/s10489-018-1361-5.

Mini Student’s Glossary (incomplete, but hopefully helpful for the newcomer student):

Anchors = developed by Ribeiro et al. (2018); works similarly to LIME but uses if-then rules (the "anchors") to describe the local region in which an explanation holds.

Ante-hoc Explainability (AHE) = such models are interpretable by design, e.g. glass-box approaches; typical examples include linear regression, decision trees/lists, random forests, Naive Bayes and fuzzy inference systems, or GAMs, Stochastic AOGs and deep symbolic networks. They have a long tradition, can be designed from expert knowledge or from data, and are useful as a framework for the interaction between human knowledge and the hidden knowledge in the data.

BETA = Black Box Explanation through Transparent Approximation, developed by Lakkaraju, Bach & Leskovec (2016); it learns two-level decision sets, in which each rule explains part of the model behaviour. Understanding such model behaviour is an increasingly important problem in the daily use of AI/ML, see e.g. http://news.mit.edu/2019/better-fact-checking-fake-news-1017

Bias = the inability of an ML method to represent the true relationship; high bias can cause an algorithm to miss the relevant relations between features and target outputs (underfitting).
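High bias can be seen in a few lines of NumPy. This minimal sketch assumes synthetic data whose true relationship is quadratic (the data, the noise level and the polynomial degrees are illustrative choices): a straight line cannot represent the relationship, so its training error stays high, whereas a degree-2 polynomial fits well.

```python
import numpy as np

rng = np.random.default_rng(0)
x = np.linspace(-3, 3, 200)
y = x**2 + 0.1 * rng.standard_normal(x.size)   # true relation is quadratic


def train_mse(degree):
    """Mean squared error on the training data of a least-squares polynomial fit."""
    coeffs = np.polyfit(x, y, degree)
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))


mse_linear = train_mse(1)      # high bias: underfits even the training data
mse_quadratic = train_mse(2)   # can represent the true relation
print(mse_linear, mse_quadratic)
```

Note that the linear model's error is high on the training data itself; more data would not help, which is what distinguishes bias from variance.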

Causability = is a property of a human (natural intelligence) and a measurement for the degree of human understanding; we have developed the System Causability Scale (SCS).

Counterfactual = a hypothesis that is contrary to the facts (similar to a counterexample); a hypothetical state of the world, used to assess the impact of an action in the real world; or a conditional statement whose conditional clause is false ("what if …?"). Counterfactuals are very important for future human-AI interfaces.
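A counterfactual explanation answers a "what-if" question such as: what is the smallest change to this input that would have changed the decision? For a linear classifier and Euclidean distance this has a closed form: move the input along the weight vector just across the decision boundary. The sketch below assumes a hypothetical two-feature linear model (`w`, `b` and the `margin` parameter are illustrative); practical counterfactual methods add constraints such as plausibility, sparsity or immutable features, which are omitted here.

```python
import numpy as np

# Hypothetical linear classifier: predict class 1 if w . x + b > 0.
w = np.array([2.0, -1.0])
b = -1.0


def score(x):
    return float(w @ x + b)


def linear_counterfactual(x, margin=1e-3):
    """Closest point (in Euclidean distance) to x that lies just on the
    other side of the decision boundary w . x + b = 0."""
    s = score(x)
    # The shortest path to the boundary runs along w; overshoot slightly by `margin`.
    return x - (s / (w @ w)) * (1.0 + margin) * w


x = np.array([1.0, 0.5])
x_cf = linear_counterfactual(x)
print(score(x), score(x_cf))   # positive, then slightly negative
print(x_cf - x)                # the change needed to flip the decision
```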

Counterexample = an exception to a proposed general rule or law, i.e. an example that disproves a general statement.

Decomposition = the process of resolving relationships into their constituent components (ideally those that represent what is of interest). This is largely theoretical, because in the real world it is hard to achieve due to complexity (e.g. noise) and untraceable imponderables in our observations.

Deduction = the derivation of a conclusion by reasoning.

Explainability = motivated by the opaqueness of so-called "black-box" approaches, the ability to provide an explanation of why a machine decision has been reached (e.g. why did the deep network recognize a cat?). Finding an appropriate explanation is difficult, because it requires understanding the context and describing the causality and consequences of a given fact. (German: Erklärbarkeit; see also: Verstehbarkeit, Nachvollziehbarkeit, Zurückverfolgbarkeit, Transparenz)

Explanation = a set of statements that describe a given set of facts in order to clarify their causality, context and consequences; a core topic of knowledge discovery, involving "why" questions ("Why is this a cat?"). (German: Erklärung, Begründung)

Explanatory power = the ability of a hypothesis or theory to effectively explain the subject matter it pertains to (opposite: explanatory impotence).

Explicit Knowledge = knowledge that can easily be explained, e.g. articulated in natural language, and shared with others.

European General Data Protection Regulation (EU GDPR) = Regulation (EU) 2016/679 (see EUR-Lex 32016R0679); it can make black-box approaches difficult to use, because they are often unable to explain why a decision has been made (see explainable AI).

Feature Space = the n-dimensional space spanned by the feature variables used by an ML method, excluding the target variable; the dimension n is the number of features.

Gaussian Process (GP) = a collection of random variables indexed by time or space such that every finite subset of them has a joint (multivariate) Gaussian distribution; provides a probabilistic approach to learning in kernel machines (see: Carl Edward Rasmussen & Christopher K.I. Williams 2006. Gaussian Processes for Machine Learning, Cambridge (MA), MIT Press); this can be used for explanations (see also: Visual Exploration Gaussian).
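The probabilistic view of a GP can be sketched in a few lines of NumPy. The example below is a minimal sketch of zero-mean GP regression with a squared-exponential (RBF) kernel, following the Cholesky-based computation in Rasmussen & Williams (2006, Algorithm 2.1); the kernel choice, the hyperparameters (`length_scale`, `noise_var`) and the toy data are illustrative assumptions. The posterior variance shows where the model is uncertain, which is the kind of information an explanation can draw on.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=1.0):
    """Squared-exponential (RBF) covariance between two sets of 1-D inputs."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return np.exp(-0.5 * sq_dist / length_scale**2)


def gp_posterior(x_train, y_train, x_test, noise_var=1e-2, length_scale=1.0):
    """Posterior mean and variance of a zero-mean GP regression model."""
    K = rbf_kernel(x_train, x_train, length_scale) + noise_var * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_test, length_scale)   # (n_train, n_test)
    K_ss = rbf_kernel(x_test, x_test, length_scale)   # (n_test, n_test)
    L = np.linalg.cholesky(K)                         # K = L @ L.T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = np.diag(K_ss) - np.sum(v**2, axis=0)
    return mean, var


x_train = np.array([-2.0, -1.0, 0.0, 1.0, 2.0])
y_train = np.sin(x_train)
x_test = np.concatenate([x_train, [10.0]])
mean, var = gp_posterior(x_train, y_train, x_test)
print(np.round(mean, 3))
print(np.round(var, 3))   # small near the data, close to the prior variance 1 far away
```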

Gradient = the vector of partial derivatives of a scalar function; it points in the direction of the maximum rate of increase, and its length is that rate.
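Gradient-based explanations such as SA rely on this vector. A minimal sketch, assuming a hypothetical function f(x, y) = x² + 3y whose analytic gradient (2x, 3) is known, approximates the gradient with central differences; this is a common way to check an automatically computed gradient.

```python
import numpy as np


def numerical_gradient(f, x, h=1e-5):
    """Central-difference approximation of the gradient of a scalar function f at x."""
    x = np.asarray(x, dtype=float)
    grad = np.zeros_like(x)
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = h
        grad[i] = (f(x + e) - f(x - e)) / (2 * h)
    return grad


def f(v):
    # Hypothetical example function: f(x, y) = x^2 + 3y
    return v[0] ** 2 + 3 * v[1]


g = numerical_gradient(f, [1.0, 2.0])
print(g)   # close to the analytic gradient (2x, 3) = (2, 3) at (1, 2)
```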

Ground truth = information obtained by direct observation (i.e. empirical evidence) rather than by inference. For us it is the gold standard, i.e. the ideal expected result (100 % true).

Interactive Machine Learning (iML) = machine learning algorithms that can interact with (partly human) agents and can optimize their learning behaviour through this interaction. Holzinger, A. 2016. Interactive Machine Learning for Health Informatics: When do we need the human-in-the-loop? Brain Informatics (BRIN), 3, (2), 119-131.

Inverse Probability = an older term for the probability distribution of an unobserved variable; it was described by De Morgan (1837) in reference to Laplace's (1774) method of probability.
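In modern terminology, the inverse probability of an unobserved parameter θ given observed data x is the posterior distribution obtained with Bayes' theorem (the symbols θ and x are introduced here for illustration):

```latex
p(\theta \mid x) = \frac{p(x \mid \theta)\, p(\theta)}{p(x)},
\qquad
p(x) = \int p(x \mid \theta)\, p(\theta)\, d\theta
```

Here p(θ) is the prior, p(x | θ) the likelihood and p(x) the evidence.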

Implicit Knowledge = knowledge that is very hard to articulate: we apply it but cannot explain it (also called tacit knowledge).

In-Vitro Diagnostic Medical Devices Regulation (IVDR) = applicable from 26 May 2022; it also requires re-traceability and interpretability for AI-assisted medical applications [see: EUR LEX IVDR]. In-vitro = "in the glass", studies performed with microorganisms, cells, or biological molecules outside their normal biological context; in-vivo = "within the living", as opposed to a tissue extract; in-silico = performed via computer simulation; in-situ = "in place", i.e. in the real-world context.

KANDINSKY-Patterns = "a Swiss knife for the study of explainability", see https://www.youtube.com/watch?v=UuiV0icAlRs

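Kernel = a similarity function that corresponds to an inner product in a (possibly high-dimensional) feature space; kernel methods are a class of algorithms for pattern analysis built on such functions, e.g. the support vector machine (SVM); very useful for explainable AI.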

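Kernel trick = implicitly mapping data into a higher-dimensional feature space, in which the classes may be separable with a clear margin, by computing inner products in that space via a kernel function instead of computing the mapping explicitly.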
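The kernel trick can be checked numerically. This minimal sketch uses the textbook pair of the homogeneous degree-2 polynomial kernel k(a, b) = (a · b)² and its explicit feature map φ for 2-D inputs; the example vectors and points are illustrative. The kernel returns the same inner product as the explicit mapping without computing φ, and in the feature space a linear function separates points inside and outside the unit circle.

```python
import numpy as np


def phi(v):
    """Explicit degree-2 feature map for a 2-D input (x1, x2)."""
    x1, x2 = v
    return np.array([x1**2, np.sqrt(2.0) * x1 * x2, x2**2])


def poly_kernel(a, b):
    """Homogeneous polynomial kernel of degree 2: k(a, b) = (a . b)^2."""
    return float(np.dot(a, b)) ** 2


a = np.array([1.0, 2.0])
b = np.array([3.0, -1.0])
explicit = float(phi(a) @ phi(b))   # inner product after the explicit mapping
implicit = poly_kernel(a, b)        # same value, mapping never computed
print(explicit, implicit)

# Points inside vs. outside the unit circle are not linearly separable in 2-D,
# but in feature space the linear function w . phi(v) with w = (1, 0, 1),
# i.e. x1^2 + x2^2, separates them with threshold 1.
w = np.array([1.0, 0.0, 1.0])
inside = [np.array([0.5, 0.0]), np.array([0.0, -0.5])]
outside = [np.array([2.0, 0.0]), np.array([-1.0, 1.5])]
print([float(w @ phi(p)) for p in inside], [float(w @ phi(p)) for p in outside])
```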

LORE = LOcal Rule-based Explanations by Pastor & Baralis, 2019; uses a genetic algorithm to identify the most important features.

Multi-Agent Systems (MAS) = collections of several independent agents, which can also be a mixture of computer agents and human agents. An excellent pointer for the latter is: Jennings, N. R., Moreau, L., Nicholson, D., Ramchurn, S. D., Roberts, S., Rodden, T. & Rogers, A. 2014. On human-agent collectives. Communications of the ACM, 80-88.

Post-hoc Explainability (PHE) = such methods are designed to interpret black-box models after training; they provide local explanations for a specific decision, which can be reproduced on request. Typical examples include LIME, BETA, LRP, Local Gradient Explanation Vectors, prediction decomposition, or simply feature selection.
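The core idea of a local surrogate such as LIME can be sketched as follows. This is a simplified, hypothetical version, not the `lime` package itself: it assumes Gaussian perturbations around the instance, an exponential proximity kernel and an unregularized weighted least-squares fit, whereas the real package makes its own choices (e.g. how it samples, which regressor it fits, feature selection). `black_box` stands in for any opaque model; for it, the local coefficients should lie close to its gradient at x, i.e. (2, 0.5).

```python
import numpy as np


def black_box(X):
    """Hypothetical opaque model: maps (n, d) inputs to n scores."""
    return X[:, 0] ** 2 + 0.5 * X[:, 1]


def local_surrogate(predict, x, n_samples=2000, scale=0.1, kernel_width=0.25, seed=0):
    """Weighted linear surrogate of `predict` around the instance x."""
    rng = np.random.default_rng(seed)
    # 1. Perturb the instance with Gaussian noise and query the black box.
    Z = x + scale * rng.standard_normal((n_samples, x.size))
    y = predict(Z)
    # 2. Weight each perturbed sample by its proximity to x.
    d2 = np.sum((Z - x) ** 2, axis=1)
    weights = np.exp(-d2 / kernel_width**2)
    # 3. Fit y ~ intercept + (Z - x) @ coef by weighted least squares.
    A = np.hstack([np.ones((n_samples, 1)), Z - x])
    sw = np.sqrt(weights)
    sol, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return sol[1:]   # per-feature local importance


x = np.array([1.0, 0.0])
coef = local_surrogate(black_box, x)
print(np.round(coef, 2))
```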

Preference learning (PL) = concerns problems in learning to rank, i.e. learning a predictive preference model from observed preference information, e.g. with label ranking, instance ranking, or object ranking. Fürnkranz, J., Hüllermeier, E., Cheng, W. & Park, S.-H. 2012. Preference-based reinforcement learning: a formal framework and a policy iteration algorithm. Machine Learning, 89, (1-2), 123-156.

Saliency map = an image that shows, for each pixel, its relevance (e.g. for a model's prediction) in a representation that is usually easier for human perception, such as a heatmap.

Tacit Knowledge = knowledge gained from personal experience that is even more difficult to express than implicit knowledge.

TCAV = Testing with Concept Activation Vectors, developed by the Google group around Kim et al. (2017); it explains a model in terms of human-understandable concepts learned from examples (concept learning).

Transfer Learning (TL) = The ability of an algorithm to recognize and apply knowledge and skills learned in previous tasks to novel tasks or new domains, which share some commonality. Central question: Given a target task, how do we identify the commonality between the task and previous tasks, and transfer the knowledge from the previous tasks to the target one?

Pan, S. J. & Yang, Q. 2010. A Survey on Transfer Learning. IEEE Transactions on Knowledge and Data Engineering, 22, (10), 1345-1359, doi:10.1109/tkde.2009.191.

Zero-shot learning = we see this as an extreme case of contextual domain adaptation.

Pointers (incomplete – but hopefully a good start for the newcomer):

- Coming Event: Deadline: March 29, 2020, xAI Workshop at the ARES/CD-MAKE 2020 conference, University College Dublin, August 26-29, 2020: https://human-centered.ai/explainable-ai-2020/

- Past Event: MAKE-Explainable AI (MAKE-exAI) workshop on explainable Artificial Intelligence at the CD-MAKE 2019 conference, Canterbury, Kent (UK), August 27-30, 2019: https://cd-make-2019.archive.sba-research.org/

- Past Event: Deadline: July 2, 2018: 2nd International Workshop on Interactive Adaptive Learning (IAL2018), co-located with the European Conference on Machine Learning and Principles and Practice of Knowledge Discovery (ECML PKDD 2018), 10-14 September 2018, Dublin (Ireland): https://www.ies.uni-kassel.de/p/ial2018/index.html

- Past Event: Deadline: June 11, 2018: Workshop on Interpretability of Machine Intelligence in Medical Image Computing at MICCAI 2018, September 16, 2018: https://imimic.bitbucket.io

- Past Event: Deadline: May 7, 2018, MAKE-Explainable AI (MAKE-exAI) workshop on explainable Artificial Intelligence at the CD-MAKE 2018 conference, Hamburg, August 27-30, 2018: https://cd-make.net/special-sessions/make-explainable-ai

- Past Event: NIPS 2017 Symposium "Interpretable Machine Learning" (December 7, 2017): https://interpretable.ml/ and https://arxiv.org/html/1711.09889, organized by Andrew G. WILSON, Cornell University; Jason YOSINSKI, Uber AI Labs; Patrice SIMARD, Microsoft Research; Rich CARUANA, Cornell University; William HERLANDS, Carnegie Mellon University

Selection of important people relevant to the field (incomplete, in chronological order)

BAYES, Thomas (1702-1761) gave a straightforward definition of probability [Wikipedia]

HUME, David (1711-1776) stated that causation is a matter of perception; he argued that inductive reasoning and belief in causality cannot be justified rationally; our trust in causality and induction results from custom and mental habit [Wikipedia]

PRICE, Richard (1723-1791) edited and commented on the work of Thomas Bayes in 1763 [Wikipedia]

LAPLACE, Pierre-Simon, Marquis de (1749-1827) developed the Bayesian interpretation of probability [Wikipedia]

GAUSS, Carl Friedrich (1777-1855), with contributions from Laplace, derived the normal distribution (Gaussian bell curve) [Wikipedia]

MARKOV, Andrey (1856-1922) worked on stochastic processes and on what is known now as Markov Chains. [Wikipedia]

TUKEY, John Wilder (1915-2000) suggested in 1962, together with Frederick Mosteller, the name "data analysis" for computational statistical sciences, which much later became known as data science [Wikipedia]

PEARL, Judea (born 1936) received the Turing Award (2011) for pioneering work on Bayesian networks and probabilistic approaches to AI [Wikipedia]

Antonyms (incomplete – but hopefully helpful for new students)

- ante-hoc <> post-hoc

- biased <> unbiased

- big data sets <> small data sets

- certain <> uncertain

- correlation <> causality

- comprehensible <> incomprehensible

- confident <> doubtful

- discriminative <> generative

- discriminatory <> nondiscriminatory

- easy <> difficult

- ethical <> unethical

- excitatory <> inhibitory

- explainable <> obscure

- explicable <> inexplicable

- Frequentist <> Bayesian

- faithful <> faithless

- fair <> unfair

- fidelity <> infidelity

- fluctuating <> constant

- glass box <> black box

- independent and identically distributed data (IID data) <> non-IID data (real-world data)

- intelligible <> unintelligible

- interactive <> autonomous

- interpretable <> uninterpretable

- legal <> illegal

- legitimate <> illegitimate

- local <> global

- low dimensional <> high dimensional

- minimum <> maximum

- objective <> subjective

- parsimonious <> abundant

- parametric <> non-parametric

- predictable <> unpredictable

- reliable <> unreliable

- reasonable <> unreasonable

- repeatable <> unrepeatable

- replicable <> not replicable

- re-traceable <> untraceable

- significant <> negligible

- specific <> agnostic

- supervised <> unsupervised

- sure <> unsure

- transparent <> opaque

- trustworthy <> untrustworthy

- truth <> untruth

- trueness <> falseness

- truthful <> untruthful

- underfitting <> overfitting

- valid <> invalid

- verity <> falsity

- verifiable <> non-verifiable (undetectable)

- zero-shot <> data-driven

Summary for German-speaking students:

Artificial intelligence (AI) and machine learning (ML) today enable automated problem solving without human intervention. The class of "deep learning" (DL) algorithms is remarkably successful: astonishing results are achieved in a wide range of application areas, from autonomous driving to text translation (currently, see e.g. DeepL). In the medical domain, however, domain experts must be able to understand why an algorithm has arrived at a particular result. Consequently, interest is growing in traceability, interpretability and explainability, which are brought together in the emerging field of "Explainable AI" (xAI).

xAI studies the transparency and traceability of opaque AI/ML approaches, and a variety of methods already exists. For example, "Layer-wise Relevance Propagation" can highlight, by means of a "heatmap", the relevant parts of the inputs to, and the representations in, a neural network that caused a result. This is a first important step towards ensuring that end users can take responsibility for AI-assisted decision making. Interactive machine learning (iML) adds the component of human expertise to these processes by enabling experts to retrace and understand the results (e.g. plausibility checks). In summary, explainable AI deals with implementing transparency and traceability for statistical black-box machine learning methods, in particular deep learning (DL); in the medical domain, however, there is a need to go beyond explainable AI. To reach a level of "explainable medicine", we need causability. In the same way that usability encompasses measurements for the quality of use, causability encompasses measurements for the quality of explanations. See the following German-language articles:

https://link.springer.com/article/10.1365/s40702-020-00586-y

https://link.springer.com/article/10.1007%2Fs00287-018-1102-5